Your developers are completing 21% more tasks. They’re merging 98% more pull requests. By every individual activity measure, AI coding assistants are boosting productivity.

But your DORA metrics? They haven’t budged. Lead time, deployment frequency, change failure rate – all flat.

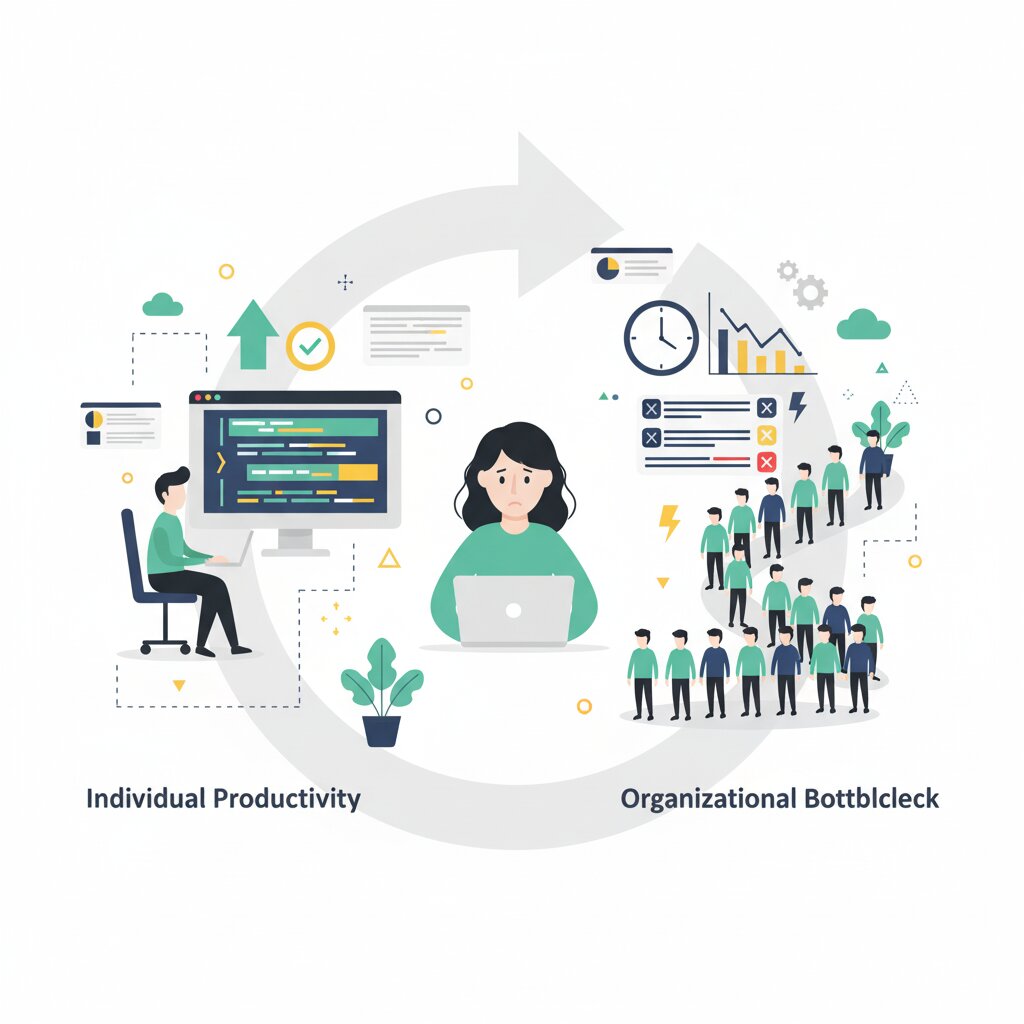

Here’s what’s happening: PR review time has climbed 91%, and that bottleneck swallows every individual gain. AI accelerates code generation, but review capacity stays constant. This mismatch means individual velocity gains evaporate before reaching production.

Understanding this mismatch is the first step to redesigning workflows that actually unlock organisational AI value. And that’s what you need to prove ROI and scale your team effectively.

What is the AI Productivity Paradox?

The AI coding productivity paradox shows up most clearly at the organisational level. AI coding assistants dramatically boost what individual developers can get done: developers using AI tools complete 21% more tasks, merge 98% more pull requests, and interact with 47% more pull requests each day.

Yet organisational delivery metrics show no measurable business impact. DORA metrics – lead time, deployment frequency, change failure rate, MTTR – remain unchanged despite AI adoption.

Why does this matter? Individual velocity gains evaporate before reaching production. You’re creating invisible waste.

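It’s Amdahl’s Law in action: the overall speedup from accelerating one stage is capped by the time spent in every stage you didn’t accelerate. Systems move only as fast as their slowest link, so when AI accelerates code generation but downstream processes can’t match that velocity, the whole system runs at the pace of the bottleneck.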
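To put rough numbers on it, Amdahl’s Law says that if a fraction p of end-to-end time is sped up by a factor s, the overall speedup is 1 / ((1 − p) + p / s). The 30% coding share below is a hypothetical figure chosen to show the shape of the effect, not a measured value.

```python
def amdahl_speedup(accelerated_fraction: float, stage_speedup: float) -> float:
    """Overall speedup when only `accelerated_fraction` of total time becomes `stage_speedup` times faster."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if stage_speedup <= 0:
        raise ValueError("stage_speedup must be positive")
    unaffected = 1.0 - accelerated_fraction  # time in stages AI doesn't touch (review, CI, deploy...)
    return 1.0 / (unaffected + accelerated_fraction / stage_speedup)


# Hypothetical: coding is 30% of lead time and AI makes coding twice as fast.
print(f"{amdahl_speedup(0.30, 2.0):.2f}x")  # prints 1.18x
# Even near-instant coding caps the gain at 1 / 0.70.
print(f"{amdahl_speedup(0.30, 1e9):.2f}x")  # prints 1.43x
```

Doubling coding speed yields under 20% faster delivery in this scenario, and no amount of coding speed gets past the limit set by everything else.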

Faros AI’s telemetry analysis of over 10,000 developers found this paradox consistently. The DORA Report 2025 survey of nearly 5,000 developers confirms it. Stanford and METR research shows developers are poor estimators of their own productivity – they feel faster without being faster.

Here’s the reality: developers conflate “busy” with “productive.” Activity in the editor feels like progress. But that feeling doesn’t translate to features shipped or value delivered.

Why Does PR Review Time Increase 91% with AI Adoption?

The 91% increase in PR review time is the constraint preventing organisational gains.

The root cause is simple. PRs are 154% larger on average because AI generates more code per unit of time. If developers produce 98% more PRs and each is 154% larger, review workload explodes.

Senior engineers face this cognitive overload daily. They’re reviewing substantially more code with the same hours available. Code review is fundamentally human work that doesn’t scale with AI acceleration.

Here’s the “thrown over the wall” pattern in practice: developers finish work faster but create massive queues at the review stage. Your most experienced developers become the bottleneck because they hold the system knowledge and architectural judgement that review requires.

Quality concerns add to this burden. AI adoption is consistently associated with a 9% increase in bugs per developer, and more bugs mean reviewers must check each PR more thoroughly, which raises the time spent per review.

Walk through the math. Your developers produce 98% more PRs. Each PR is 154% larger. That’s 1.98 × 2.54, or roughly 5x the review workload, hitting the same review capacity. Something has to give.
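The 5x figure comes from multiplying the two ratios. This sketch assumes review effort scales linearly with the amount of code per PR, which is a simplification; very large PRs are often disproportionately harder to review.

```python
def review_workload_multiplier(pr_count_increase: float, pr_size_increase: float) -> float:
    """Relative review workload, assuming effort is proportional to PR count times PR size.

    Increases are fractions: 0.98 means 98% more.
    """
    return (1.0 + pr_count_increase) * (1.0 + pr_size_increase)


multiplier = review_workload_multiplier(0.98, 1.54)
print(f"Review workload: {multiplier:.2f}x baseline")  # prints 5.03x baseline
```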

Review throughput now defines the maximum velocity your organisation can sustain. Speed up code generation all you want – if review can’t keep pace, you’ve just moved the bottleneck, not eliminated it.

What Causes the Throughput-Review Mismatch?

The throughput-review mismatch is a structural imbalance. AI accelerates individual code generation but review capacity remains constant.

Code must be reviewed before merging. That’s a sequential dependency you can’t eliminate.

AI changes how developers operate. They’re touching 9% more task contexts and 47% more pull requests daily. They’re orchestrating AI agents across multiple workstreams.

Here’s the amplification effect. Per-developer gains add up across the team, but review capacity doesn’t grow with them. Ten developers each producing 98% more PRs send nearly 20 PRs into review for every 10 that arrived before, and each of those PRs is larger. If reviewers were already close to capacity, the excess piles up as an ever-growing queue.
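A toy queue model shows why the queue never drains. Every number here is a hypothetical assumption: reviewers who can clear 100 PRs a week, PRs that used to arrive at 90 a week, and arrivals now 1.98 times that. The model counts PRs only and ignores that each PR is also larger, so it understates the problem.

```python
def simulate_review_backlog(arrivals_per_week: float, capacity_per_week: float, weeks: int) -> list[float]:
    """Return the review backlog at the end of each week for constant arrival and review rates."""
    backlog = 0.0
    history = []
    for _ in range(weeks):
        # New PRs join the queue; reviewers clear up to their weekly capacity.
        backlog = max(0.0, backlog + arrivals_per_week - capacity_per_week)
        history.append(backlog)
    return history


before = simulate_review_backlog(arrivals_per_week=90, capacity_per_week=100, weeks=4)
after = simulate_review_backlog(arrivals_per_week=90 * 1.98, capacity_per_week=100, weeks=4)
print(before)                     # prints [0.0, 0.0, 0.0, 0.0]
print([round(b) for b in after])  # prints [78, 156, 235, 313]
```

Below capacity the queue stays empty; above it, the backlog grows by the same amount every week until something in the workflow changes.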

When developers interact with 47% more pull requests daily, reviewers spread themselves thinner across more change contexts. That fragmented attention slows review velocity.

Simply hiring more reviewers doesn’t solve this. It’s a workflow architecture issue, not a capacity issue. Without lifecycle-wide modernisation, AI’s benefits evaporate at the first bottleneck.

How Does AI Amplify Existing Team Strengths and Weaknesses?

AI acts as an amplifier. High-performing organisations see their advantages grow, while struggling ones find their dysfunctions intensified.

High-performing organisations have strong version control, quality platforms, small batch discipline, and healthy data ecosystems. AI amplifies these advantages.

Struggling organisations have poor documentation, weak testing, and ad-hoc processes. AI amplifies these weaknesses too. Individual productivity increases get absorbed by downstream bottlenecks.

Most organisations haven’t built the capabilities that AI needs yet. AI adoption recently reached critical mass – over 60% weekly active users – but supporting systems remain immature.

Surface-level adoption doesn’t help. Most developers only use autocomplete features. Advanced capabilities remain largely untapped.

You have roughly 12 months to shift from experimentation to operationalisation. Early movers are already seeing organisational-level gains translate to business outcomes.

What is the 70% Problem and Why Does It Matter?

The 70% problem refers to what happens after AI generates the first 70% of a solution – the remaining 30% of work proves deceptively difficult.

That final 30% includes production integration, authentication, security, API keys, edge cases, and debugging. AI struggles with this work because it requires deep system context and judgement about trade-offs.

Here’s what “vibe coding” looks like. You focus on intent and system design, letting AI handle implementation details. It’s AI-assisted coding where you “forget that the code even exists”. This works for prototypes and MVPs. It’s problematic for production systems.

The “two steps back” anti-pattern emerges when developers don’t understand generated code. They use AI to fix AI’s mistakes, creating a degrading loop where each fix creates 2-5 new problems.

Context engineering is the bridge from “prompting and praying” to effective AI use. It means providing AI tools with optimal context – system instructions, documentation, codebase information.
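Here is a minimal sketch of the idea: gather system instructions, documentation and code, then fill a fixed budget in priority order so the most important context always gets in. The `ContextItem` type, the priorities, and the word count standing in for a real tokenizer are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    source: str    # hypothetical labels such as "system", "docs", "code"
    priority: int  # lower number = more important
    text: str


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def build_context(items: list[ContextItem], budget_tokens: int) -> str:
    """Greedily pack the highest-priority items that fit within the token budget."""
    selected = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = approx_tokens(item.text)
        if used + cost > budget_tokens:
            continue  # skip items that would overflow; smaller ones may still fit
        selected.append(f"## {item.source}\n{item.text}")
        used += cost
    return "\n\n".join(selected)


items = [
    ContextItem("system", 0, "You are editing an existing service. Follow the team style guide and module boundaries."),
    ContextItem("docs", 1, "Auth uses short-lived tokens issued by the gateway; never hard-code API keys."),
    ContextItem("code", 2, "def get_user(request): ..."),
]
print(build_context(items, budget_tokens=20))
```

With a 20-token budget the docs item is skipped while the shorter code snippet still fits. A real setup would use the model’s own tokenizer and might summarise or truncate items instead of dropping them.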

In vibe coding experiments, each new feature took progressively longer to integrate. The result was a monolithic architecture in which the backend, frontend, data access layer, and API integrations were tightly coupled.

For early-stage builds, MVPs, and internal tools, vibe coding is effective. For everything else, you need to blend it with rigour.

Why Don’t DORA Metrics Improve Despite Individual AI Gains?

DORA metrics measure end-to-end delivery capability. That’s why they’re the right metrics – they measure what matters for business outcomes.

Lead time includes the review bottleneck. Faster code generation doesn’t reduce lead time if PRs sit in review queues for 91% longer.

Deployment frequency is limited by downstream processes. It stayed flat in many high-AI teams because they still deployed on fixed schedules.

Change failure rate is affected by that 9% bug increase. More bugs per developer means a larger share of changes are likely to fail once they reach production.

MTTR requires system understanding. AI-generated code that developers don’t fully understand takes longer to debug when it breaks.
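To make the four metrics concrete, here is a minimal sketch that computes them from a hypothetical list of deployment records. The `Deployment` fields and the simplified definitions (median commit-to-deploy lead time, deploys per week, failed share of deploys, mean restore time measured from deploy) are assumptions; telemetry platforms use richer data and their own definitions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def dora_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute simplified DORA metrics over a reporting period of `period_days`."""
    if not deploys:
        raise ValueError("need at least one deployment")
    failures = [d for d in deploys if d.failed]
    # Simplification: a failure is assumed to start at deploy time.
    restore_hours = [hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at is not None]
    return {
        "lead_time_hours_median": median(hours(d.deployed_at - d.committed_at) for d in deploys),
        "deploys_per_week": len(deploys) / (period_days / 7),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr_hours": sum(restore_hours) / len(restore_hours) if restore_hours else 0.0,
    }


t0 = datetime(2025, 1, 6, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=30)),
    Deployment(t0, t0 + timedelta(hours=50), failed=True, restored_at=t0 + timedelta(hours=52)),
    Deployment(t0, t0 + timedelta(hours=70)),
]
print(dora_metrics(deploys, period_days=14))
```

Note that nothing here measures how fast code was written: a PR that waits days in review shows up directly in lead time, however quickly it was generated.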

Activity is up. Outcomes aren’t.

Measurement challenges make this worse. Most organisations lack baseline data from before AI adoption. Self-report bias inflates perceived gains. The productivity placebo effect creates a gap between perception and reality.

Developers felt faster with AI assistance despite taking 19% longer in a controlled study by METR. That’s why measuring AI coding ROI requires objective telemetry rather than developer perception: individual metrics can mislead when they don’t account for downstream bottlenecks.

Telemetry platforms like Faros AI and Swarmia integrate source control, CI/CD, and incident tracking to show objective reality versus developer perception.

How Can You Redesign Workflows to Prevent Review Bottlenecks?

Adding AI to existing workflows creates bottlenecks. You must restructure processes.

AI-assisted code review scales review capacity by using AI tools to review AI-generated code. AI provides an initial review to catch obvious issues before human review. Use AI to handle routine checks while humans focus on high-value review – architecture, business logic, security implications, maintainability.

Small batch discipline means keeping changes incremental even though AI can generate large volumes of code. AI makes it easy to generate massive changes. Don’t let it. Enforce work item size limits. Smaller changes are easier to review, less risky to deploy, and faster to debug.
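One way to enforce a size limit is a CI step that fails when a PR’s diff is too large. This sketch parses the output of `git diff --numstat` (tab-separated added and deleted line counts per file, with `-` for binary files). The 400-line limit and the `origin/main` base ref are hypothetical defaults, not recommendations.

```python
import subprocess

MAX_CHANGED_LINES = 400  # hypothetical team limit; tune to your own review capacity


def changed_lines(numstat_output: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output, skipping binary files."""
    total = 0
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue
        added, deleted = parts[0], parts[1]
        if added == "-" or deleted == "-":
            continue  # binary files report "-" instead of line counts
        total += int(added) + int(deleted)
    return total


def main(base_ref: str = "origin/main") -> int:
    """Return a process exit code: 0 if the PR is within the limit, 1 otherwise."""
    diff = subprocess.run(
        ["git", "diff", "--numstat", f"{base_ref}...HEAD"],
        capture_output=True, text=True, check=True,
    ).stdout
    size = changed_lines(diff)
    if size > MAX_CHANGED_LINES:
        print(f"PR changes {size} lines (limit {MAX_CHANGED_LINES}); please split it.")
        return 1
    print(f"PR size OK: {size} lines.")
    return 0
```

In a CI job you would call `sys.exit(main())` so an oversized PR fails the check. Counting changed lines is a blunt proxy; some teams exclude generated files or lockfiles before applying the limit.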

Batch processing strategies group similar reviews into dedicated review time blocks. Set up async review patterns that don’t interrupt flow. Use intelligent routing to send complex architectural changes to senior developers while directing routine updates to appropriate reviewers.
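Intelligent routing can start as a simple rule set. In this sketch the path prefixes, the size threshold, and the reviewer pool names are all hypothetical; the point is the shape: changes that touch architecturally sensitive areas or exceed a size threshold go to senior reviewers, and routine changes go to the wider team.

```python
from dataclasses import dataclass

# Hypothetical examples of areas where changes need architectural judgement.
SENSITIVE_PREFIXES = ("auth/", "payments/", "infra/", "db/migrations/")
LARGE_CHANGE_LINES = 300  # hypothetical threshold


@dataclass
class PullRequest:
    title: str
    files: list[str]
    changed_lines: int


def route_review(pr: PullRequest) -> str:
    """Return which (hypothetical) reviewer pool should handle this PR."""
    touches_sensitive = any(f.startswith(SENSITIVE_PREFIXES) for f in pr.files)
    if touches_sensitive or pr.changed_lines > LARGE_CHANGE_LINES:
        return "senior-reviewers"
    return "team-reviewers"


print(route_review(PullRequest("Rotate session keys", ["auth/session.py"], 40)))  # prints senior-reviewers
print(route_review(PullRequest("Fix typo in guide", ["docs/guide.md"], 2)))       # prints team-reviewers
```

Rules like these are easy to audit and adjust; the trade-off is that they only see paths and size, so they can’t spot a risky change hiding in an innocuous-looking file.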

Lifecycle-wide modernisation scales all downstream processes – testing, CI/CD, deployment – to match AI-driven velocity. Organisations already investing in platform engineering are better positioned for AI adoption. The same self-service capabilities and automated quality gates that help human teams scale work just as well for managing AI-generated code.

What Organisational Capabilities Must Exist Before AI Creates Value?

Some organisations unlock value from AI investments while others waste them. The difference is readiness.

The seven DORA capabilities that determine AI success:

Clear AI stance: documented policies on permitted tools and usage.

Healthy data ecosystems: high-quality internal data that’s accessible and unified rather than siloed.

AI-accessible internal data: company-specific context for AI tools, not just generic assistance.

Strong version control: mature development workflows and robust rollback capabilities.

Small batches: incremental change discipline, not oversized PRs.

User-centric focus: faster delivery that stays anchored to user needs.

Quality internal platforms: self-service capabilities, quick-start templates, and automated quality gates.

Platform foundations matter. Quality internal platforms enable self-service. Documentation supports context engineering. Data ecosystems feed AI tools with organisational context.

Consider AI-free sprint days – dedicated periods without AI to prevent skills erosion and maintain code understanding. When developers don’t understand generated code, they can’t effectively debug it, review it, or extend it.

In most organisations, AI usage is still driven by bottom-up experimentation with no structure, training, or best practice sharing. That’s why gains don’t materialise.

Which brings us back to the paradox: workflow design matters more than tool adoption. Individual gains disappear at the organisational level not because AI tools fail, but because organisations fail to redesign the workflows that would unlock those gains.

FAQ

How long does it take for organisational AI productivity gains to appear?

Most organisations reached critical mass adoption (60%+ weekly active users) in the last 2-3 quarters, but supporting systems remain immature. Organisations with strong foundational capabilities see gains within 6-12 months. Those without may never see organisational improvements despite individual gains. The key factor is workflow redesign timeline, not AI adoption timeline.

Can we measure AI productivity if we don’t have baseline data from before AI adoption?

Yes, though it’s challenging. Focus on leading indicators like PR review time, PR size distribution, and code review queue depth rather than trying to compare before/after productivity. Telemetry platforms like Faros AI and Swarmia can track these metrics going forward. Also measure outcome metrics (DORA metrics, feature delivery time) which matter more than activity metrics.
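As a sketch of what tracking these leading indicators might involve, the code below computes time to first review (a proxy for review time), PR size distribution, and current queue depth from a hypothetical list of PR records. The `PRRecord` fields are assumptions; real timestamps would come from your source control platform or a telemetry tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median, quantiles
from typing import Optional


@dataclass
class PRRecord:
    opened_at: datetime
    changed_lines: int
    first_review_at: Optional[datetime] = None  # None means still waiting for review


def leading_indicators(prs: list[PRRecord]) -> dict[str, float]:
    if not prs:
        raise ValueError("need at least one PR")
    reviewed = [p for p in prs if p.first_review_at is not None]
    wait_hours = [(p.first_review_at - p.opened_at) / timedelta(hours=1) for p in reviewed]
    sizes = sorted(p.changed_lines for p in prs)
    # "inclusive" keeps the percentile within the observed range of PR sizes.
    p90 = quantiles(sizes, n=10, method="inclusive")[-1] if len(sizes) >= 2 else float(sizes[0])
    return {
        "median_hours_to_first_review": median(wait_hours) if wait_hours else 0.0,
        "median_pr_size": median(sizes),
        "p90_pr_size": p90,
        "queue_depth": len(prs) - len(reviewed),
    }


t0 = datetime(2025, 3, 3, 9, 0)
prs = [
    PRRecord(t0, 120, t0 + timedelta(hours=4)),
    PRRecord(t0, 800, t0 + timedelta(hours=30)),
    PRRecord(t0, 60, t0 + timedelta(hours=2)),
    PRRecord(t0, 450),
]
print(leading_indicators(prs))
```

Tracked week over week, a rising p90 size or queue depth is an early warning that the review bottleneck is forming, well before it shows up in DORA metrics.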

Should we restrict AI tool usage to prevent the review bottleneck problem?

No. Restricting tools treats the symptom rather than the cause. The bottleneck stems from workflow design that can’t handle increased velocity, not from AI tools themselves. Focus on redesigning review processes, implementing AI-assisted reviews, and maintaining small batch discipline. Restrictions frustrate developers and cost you competitive advantage.

Why do developers feel more productive with AI even when organisational metrics don’t improve?

The productivity placebo effect. Instant AI feedback loops create dopamine reward cycles and activity in the editor feels like progress. Developers conflate “busy” with “productive.” Stanford and METR research shows developers consistently overestimate their own productivity gains because they lack visibility into downstream bottlenecks where value evaporates.

How do we prevent senior engineers from burning out reviewing AI-generated code?

Three approaches: (1) Implement AI-assisted code review to scale review capacity, (2) Enforce small batch discipline to prevent 154% PR size increases, (3) Create tiered review processes where AI-generated code gets different review depth than human-written code based on risk. Also ensure review work is recognised and rewarded in performance evaluations.

Is the solution to hire more senior engineers to handle increased review load?

No. This addresses capacity but not the underlying workflow architecture problem. Adding reviewers helps short-term but doesn’t scale: you’d need to keep growing review capacity in step with AI-generated output as adoption increases. You must redesign workflows to handle AI-driven velocity structurally, through AI-assisted reviews, batch processing, and lifecycle-wide process improvements.

What’s the difference between “vibe coding” and effective AI-assisted development?

Vibe coding prioritises speed and exploration over correctness and maintainability – excellent for prototypes/MVPs but problematic for production. Effective AI-assisted development involves context engineering (providing AI optimal context), code understanding (not blindly accepting suggestions), and handling the 70% problem (integration, security, edge cases). The difference is intentionality and comprehension.

How do we maintain code quality with 9% more bugs per developer using AI?

Implement context-aware testing where tests serve as both safety nets and context for AI agents. Strengthen quality gates and don’t let review bottlenecks pressure teams to skip thorough reviews. Use AI to help write tests, not just implementation code. Ensure developers understand generated code well enough to spot issues – consider AI-free sprint days to maintain skills.

Should different team archetypes adopt AI differently?

Yes. DORA identifies seven team archetypes with different AI adoption patterns. High-performing teams with strong capabilities can adopt advanced AI features quickly. Teams with weak foundations should focus on building organisational capabilities first – documentation, version control, testing infrastructure, platform quality – before pushing AI adoption, or risk amplifying existing dysfunctions.

What’s the first step to unlock organisational AI value?

Assess your current state against the seven DORA capabilities and the five AI enablers framework. Identify your biggest constraint – usually workflow design that can’t handle increased velocity, followed by infrastructure that can’t scale, then governance gaps. Address foundational issues before pursuing advanced AI capabilities. Start with small batch discipline and AI-assisted reviews to prevent review bottlenecks.
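A lightweight way to run the capability assessment is to score each of the seven DORA capabilities and surface the weakest. The 1-5 scale, the threshold, and the example scores are hypothetical; the value lies in forcing an explicit, comparable judgement rather than in the numbers themselves.

```python
DORA_AI_CAPABILITIES = (
    "Clear AI stance",
    "Healthy data ecosystems",
    "AI-accessible internal data",
    "Strong version control",
    "Small batches",
    "User-centric focus",
    "Quality internal platforms",
)


def weakest_capabilities(scores: dict[str, int], threshold: int = 3) -> list[str]:
    """Return capabilities scored below `threshold` on a hypothetical 1-5 scale, weakest first."""
    missing = set(DORA_AI_CAPABILITIES) - set(scores)
    if missing:
        raise ValueError(f"Unscored capabilities: {sorted(missing)}")
    below = [c for c in DORA_AI_CAPABILITIES if scores[c] < threshold]
    return sorted(below, key=lambda c: scores[c])  # stable sort keeps list order for ties


scores = {
    "Clear AI stance": 2,
    "Healthy data ecosystems": 3,
    "AI-accessible internal data": 1,
    "Strong version control": 4,
    "Small batches": 2,
    "User-centric focus": 4,
    "Quality internal platforms": 3,
}
print(weakest_capabilities(scores))
```

The first entry in the result is the candidate constraint to address before pushing further AI adoption.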

How do we prevent the “two steps back” pattern where fixing AI bugs creates more problems?

This pattern signals developers don’t understand the generated code and are using AI to fix AI’s mistakes. Solutions: (1) Mandate code review and explanation of AI suggestions before accepting, (2) Implement context engineering to provide AI better context upfront, (3) Use AI-free sprint days to maintain fundamental coding skills, (4) Create a learning culture where understanding code is valued over speed of generation.

Can AI tools review their own generated code effectively?

Limited effectiveness. AI can catch syntactic issues, basic logic errors, and style violations, but lacks the system context, business logic understanding, and architectural judgement that human reviewers provide. AI-assisted review is most effective when AI handles routine checks (formatting, common patterns) while humans focus on high-value review (architecture, business logic, security implications, maintainability).