The sidecar pattern has emerged as a cornerstone architectural approach for organisations modernising their infrastructure through container orchestration. By deploying auxiliary containers alongside main applications within Kubernetes pods, this pattern addresses the persistent challenge of managing cross-cutting concerns without compromising application code integrity.

This architectural strategy enables enterprise-scale deployment of consistent operational capabilities across heterogeneous microservices landscapes. The core advantage lies in modularity – operational logic like logging, metrics collection, and proxying runs in separate containers, allowing applications to focus purely on business responsibilities.

For technical leaders evaluating infrastructure modernisation strategies, the sidecar pattern represents a pragmatic solution to operational complexity. It is one of the essential microservices design patterns, reducing development overhead whilst providing the architectural flexibility needed for sustainable enterprise growth in cloud-native environments.

What is the Sidecar Pattern and Why Should CTOs Care?

The sidecar pattern deploys auxiliary containers alongside main application containers within Kubernetes pods, handling cross-cutting concerns like logging, monitoring, and security without modifying application code. This architectural approach reduces development overhead while enabling consistent operational capabilities across microservices at enterprise scale.

The pattern co-locates operational tasks with the primary application in separate containers, providing isolation whilst presenting the same interface to every service regardless of programming language. Because one sidecar image can be attached to many pods, a cross-cutting capability is implemented once and reused rather than reimplemented in each service.

Sidecars are independent of the primary application’s runtime environment and programming language, eliminating the need to build separate operational components for each technology stack. This enables incremental modernisation whilst protecting existing investments.

How Do Sidecar Containers Share Resources with Main Applications?

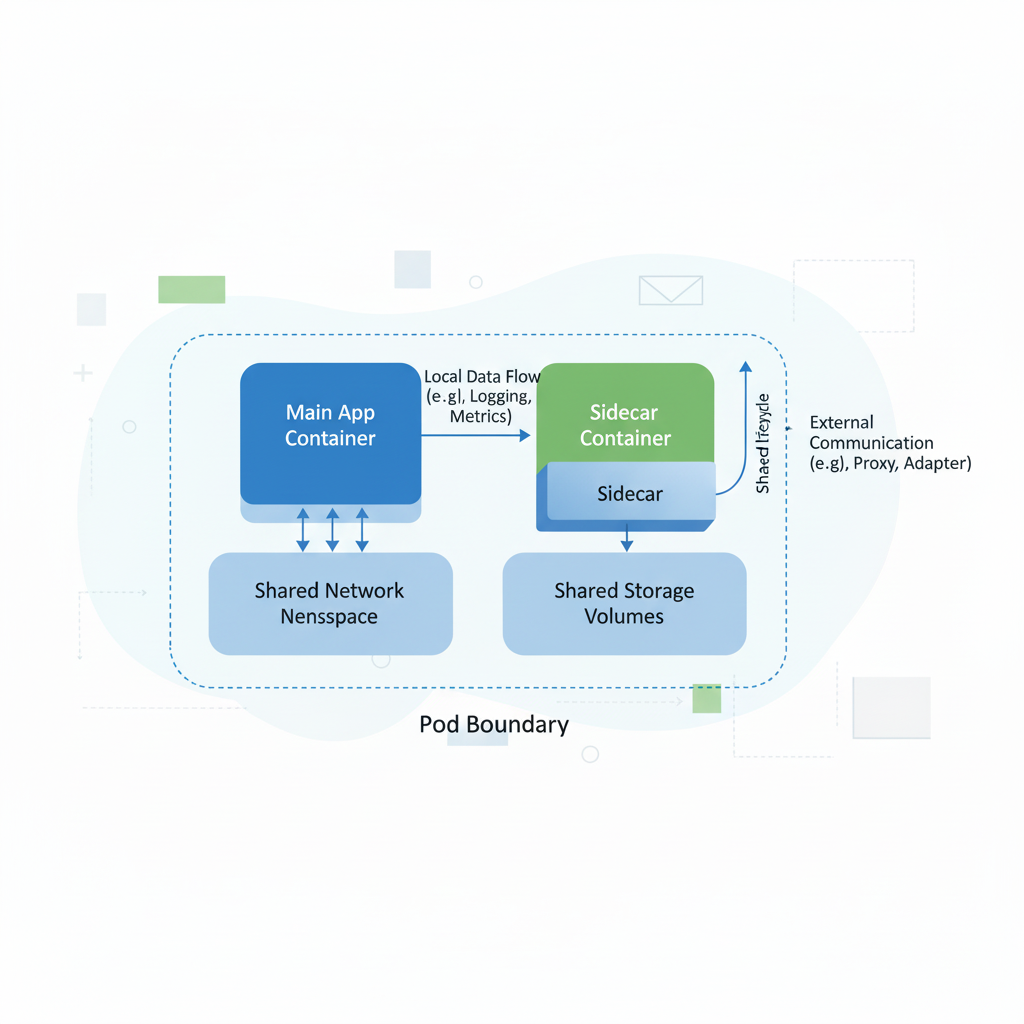

Sidecar containers share network namespace, storage volumes, and pod lifecycle with main application containers in Kubernetes. They communicate through localhost interfaces, shared file systems, or Unix domain sockets, enabling tight integration for operational tasks while maintaining process isolation and independent resource allocation for performance optimisation.

The shared network namespace creates tight integration opportunities, enabling comprehensive monitoring and seamless traffic management. Application containers can send HTTP traffic to localhost addresses where proxy sidecars listen, whilst logging sidecars can read application logs from shared volumes.
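As a rough illustration of the shared-volume arrangement, the sketch below builds a Pod manifest as a plain Python dictionary in which the application writes logs to an `emptyDir` volume and a logging sidecar mounts the same volume read-only. The image names, container names, and mount path are hypothetical placeholders, not values from any particular deployment.

```python
import json


def build_logging_pod(app_image: str, sidecar_image: str) -> dict:
    """Return a Pod manifest where the app and a log sidecar share one emptyDir volume."""
    shared_logs = {"name": "app-logs", "mountPath": "/var/log/app"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "app-with-log-sidecar"},
        "spec": {
            # emptyDir lives as long as the pod and is visible to every container that mounts it.
            "volumes": [{"name": "app-logs", "emptyDir": {}}],
            "containers": [
                {"name": "app", "image": app_image, "volumeMounts": [shared_logs]},
                {
                    "name": "log-shipper",
                    "image": sidecar_image,
                    # The sidecar only reads the logs, so mount the volume read-only.
                    "volumeMounts": [dict(shared_logs, readOnly=True)],
                },
            ],
        },
    }


# Hypothetical image names; kubectl accepts JSON manifests as well as YAML.
pod = build_logging_pod("example.com/app:1.0", "example.com/log-shipper:1.0")
print(json.dumps(pod, indent=2))
```

Both containers also share the pod's network namespace without any extra configuration, so a proxy sidecar listening on a port is reachable from the application at `localhost` on that port.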

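All containers in a pod share its lifecycle: they are scheduled together and removed together. By default, Kubernetes starts regular containers without waiting for each other to become ready and signals all of them to terminate at roughly the same time. Recent Kubernetes versions also support native sidecars, declared as init containers with restartPolicy: Always, which start before the main containers and are terminated after them. That ordering allows essential tasks like log flushing and connection draining to complete properly.

Although sidecars share a pod, CPU and memory requests and limits are set per container, so sidecars can be tuned independently of the main application. The scheduler places the pod using the combined requests of all its containers. This granular control limits resource contention whilst supporting diverse operational requirements.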
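Ordered startup and shutdown of this kind is what Kubernetes native sidecars provide: a sidecar declared under `initContainers` with `restartPolicy: Always` starts before the main containers and is stopped after them. The sketch below shows one way to express that transformation on a manifest held as a Python dictionary. It assumes a cluster version where the native sidecar feature is available, and the pod and container names are hypothetical.

```python
import copy


def as_native_sidecar(pod: dict, sidecar_name: str) -> dict:
    """Return a copy of the pod with one container redeclared as a native sidecar."""
    result = copy.deepcopy(pod)
    spec = result["spec"]
    sidecar = next(c for c in spec["containers"] if c["name"] == sidecar_name)
    spec["containers"].remove(sidecar)
    # A native sidecar is an init container that keeps running for the pod's lifetime.
    sidecar["restartPolicy"] = "Always"
    spec.setdefault("initContainers", []).append(sidecar)
    return result


# Hypothetical two-container pod used only for illustration.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo"},
    "spec": {
        "containers": [
            {"name": "app", "image": "example.com/app:1.0"},
            {"name": "log-shipper", "image": "example.com/log-shipper:1.0"},
        ]
    },
}

native = as_native_sidecar(pod, "log-shipper")
print([c["name"] for c in native["spec"]["initContainers"]])
```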

When Should You Choose Sidecar Pattern Over DaemonSets or Init Containers?

Use sidecar containers for application-specific operational tasks requiring tight coupling with individual services. Choose DaemonSets for node-level infrastructure services, such as monitoring agents that serve every pod on a node. Select init containers for one-time pre-startup tasks like database migrations. Sidecars excel when operational functionality needs per-application customisation and ongoing lifecycle management.

The architectural decision between these deployment patterns hinges on operational scope and lifecycle requirements. Sidecars and init containers both run within pods but serve distinct purposes – init containers handle setup tasks that must complete before main applications start, whilst sidecars run concurrently throughout the application lifecycle.

Use this pattern when primary applications use heterogeneous languages and frameworks, when components are owned by different teams, or when fine-grained resource control is needed. Consider whether the functionality would work better as a separate service before choosing sidecars, as communication between containers adds latency.

DaemonSets better serve infrastructure-wide operational needs, whilst sidecars excel at service-specific requirements. This distinction guides architectural decisions based on operational scope and resource allocation strategies.
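The decision rule in this section can be condensed into a few lines. The function below is only a simplified sketch of that reasoning: the two inputs (`runs_once_before_start` and `scope`) are hypothetical names chosen for illustration, and real decisions will weigh more factors, such as team ownership and latency budgets.

```python
from enum import Enum


class Pattern(Enum):
    SIDECAR = "sidecar"
    DAEMONSET = "DaemonSet"
    INIT_CONTAINER = "init container"


def choose_pattern(runs_once_before_start: bool, scope: str) -> Pattern:
    """Pick a deployment pattern from task lifecycle and operational scope.

    scope is "node" for infrastructure-wide services or "service" for
    per-application concerns.
    """
    if runs_once_before_start:
        # Setup work that must finish before the application starts.
        return Pattern.INIT_CONTAINER
    if scope == "node":
        # One agent per node serving every pod scheduled there.
        return Pattern.DAEMONSET
    if scope == "service":
        # Runs alongside the application for its whole lifetime.
        return Pattern.SIDECAR
    raise ValueError(f"unknown scope: {scope!r}")


print(choose_pattern(False, "service").value)
```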

What Are the Resource Allocation Best Practices for Sidecar Containers?

Set conservative CPU requests (50-100m) and memory limits (64-128Mi) for lightweight sidecars like logging agents. Proxy sidecars typically need higher allocations (200-500m CPU, 256-512Mi memory). Treat these ranges as starting points and tune them against observed usage. Define requests and limits separately for the main and sidecar containers to prevent resource starvation and ensure predictable application performance.

Proper resource definitions prevent scheduling issues, as unbounded sidecars can cause resource contention. Sidecars compete with the main application for CPU and memory, and a heavy sidecar without limits can starve the application.

Track fundamental resource usage metrics like CPU and memory consumption to detect allocation imbalances. Observability tools providing per-container insights across clusters make performance tuning more efficient than manual approaches.

Best practices mandate explicit resource definitions in pod manifests. Always specify both requests and limits for sidecar containers, monitor actual usage patterns, and adjust allocations based on production workload characteristics rather than theoretical requirements.
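To make the guidance concrete, the sketch below defines requests and limits for an application, a logging sidecar, and a proxy sidecar, then computes the combined requests the scheduler would have to place and flags any container missing a bound. The container names and the specific values are assumptions picked from the ranges above, and the quantity parsers handle only the `m`, `Mi`, and `Gi` suffixes used here.

```python
def cpu_millis(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '1' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


def memory_mib(quantity: str) -> int:
    """Convert a memory quantity in Mi or Gi to MiB (other suffixes are not handled)."""
    if quantity.endswith("Mi"):
        return int(quantity[:-2])
    if quantity.endswith("Gi"):
        return int(quantity[:-2]) * 1024
    raise ValueError(f"unsupported memory quantity: {quantity}")


def resources(cpu_request: str, cpu_limit: str, mem_request: str, mem_limit: str) -> dict:
    """Build the resources stanza for one container."""
    return {
        "requests": {"cpu": cpu_request, "memory": mem_request},
        "limits": {"cpu": cpu_limit, "memory": mem_limit},
    }


def pod_requests(containers: list) -> tuple:
    """Sum per-container requests; the scheduler places the pod on this total."""
    cpu = sum(cpu_millis(c["resources"]["requests"]["cpu"]) for c in containers)
    mem = sum(memory_mib(c["resources"]["requests"]["memory"]) for c in containers)
    return cpu, mem


def missing_bounds(containers: list) -> list:
    """Names of containers that lack either requests or limits."""
    return [
        c["name"]
        for c in containers
        if not {"requests", "limits"} <= set(c.get("resources", {}))
    ]


# Illustrative values only; tune against measured production usage.
containers = [
    {"name": "app", "resources": resources("500m", "1", "512Mi", "1Gi")},
    {"name": "log-shipper", "resources": resources("50m", "100m", "64Mi", "128Mi")},
    {"name": "proxy", "resources": resources("200m", "500m", "256Mi", "512Mi")},
]

print(pod_requests(containers))    # (750, 832): millicores, MiB
print(missing_bounds(containers))  # []
```

In this example the two sidecars account for a third of the pod's CPU request, which is the kind of imbalance per-container monitoring is meant to surface.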

How Do Service Mesh Architectures Leverage the Sidecar Pattern?

Service meshes deploy proxy sidecars (typically Envoy) alongside application containers to manage all network communication. These proxies handle traffic routing, load balancing, security policies, and observability without application code changes. The sidecar pattern enables consistent networking capabilities across heterogeneous microservices while maintaining centralised policy management and distributed enforcement.

Service mesh implementations rely heavily on the sidecar pattern for transparent network management. Proxy sidecars are automatically injected to intercept traffic, handling routing, load balancing, and circuit breaking. Applications remain completely unaware of the mesh, enabling independent evolution of application and infrastructure concerns.

Istio exemplifies this pattern by automatically injecting Istio proxy sidecars into Kubernetes pods, providing a centralised control plane to manage all sidecars and enforce policies consistently across the service landscape.

Sidecar proxies handle load balancing, retries, timeouts, and encryption without requiring application-level implementation. Envoy proxy sidecars for mutual TLS can be reused across entire service meshes, with platform tools enabling cluster-wide deployment of consistent configurations through single changes.
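For Istio specifically, automatic injection and mesh-wide mutual TLS are usually switched on declaratively. The sketch below builds the two manifests as Python dictionaries: a namespace labelled for sidecar injection and a `PeerAuthentication` resource requesting strict mTLS. The namespace name is hypothetical, and the API version shown should be checked against the Istio release in use.

```python
import json


def mesh_namespace(name: str) -> dict:
    """Namespace labelled so Istio injects its proxy sidecar into new pods."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": {"istio-injection": "enabled"}},
    }


def strict_mtls(namespace: str) -> dict:
    """PeerAuthentication asking sidecars in the namespace to accept only mTLS traffic."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


# "payments" is a hypothetical namespace used only for illustration.
for manifest in (mesh_namespace("payments"), strict_mtls("payments")):
    print(json.dumps(manifest, indent=2))
```

Neither manifest touches application code, which is the point of the pattern: the policy is a single change applied to every sidecar in the namespace.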

What Security Considerations Apply to Sidecar Container Deployments?

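Sidecar containers require careful security boundaries: Pod Security Standards enforced through Pod Security Admission (the replacement for the removed PodSecurityPolicy API), network policies restricting pod-level traffic, and least-privilege access controls. Network policies apply to the pod as a whole and cannot filter traffic between containers inside it, so the shared network namespace increases the attack surface. Mitigate this with strict container image security, secrets management through dedicated secret injection sidecars, and regular security scanning of sidecar images.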

Sidecars can act as dedicated security agents within pods, intercepting network traffic to enforce security policies, handling TLS termination, or managing authentication and authorisation through token validation. This isolation enables security teams to update policies independently from application development cycles.

Even though containers share localhost, communication isn’t automatically secure – sensitive sidecars handling tokens, TLS termination, or secrets should use Unix domain sockets with restricted permissions rather than TCP ports for enhanced security boundaries.
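The difference from a TCP port is that a Unix domain socket is a file, so ordinary file permissions decide who may connect. The sketch below is a minimal, self-contained demonstration using Python's standard library: a stand-in "secrets sidecar" listens on a socket restricted to its owner, and a stand-in application requests a value over it. The socket path, the `token-for:` reply format, and the function names are all hypothetical. In a real pod the socket would live on a shared volume, and the two containers would need a common user or group for the permission bits to allow access.

```python
import os
import socket
import stat
import tempfile
import threading


def serve_once(path: str, ready: threading.Event) -> None:
    """Accept one connection on an owner-only Unix socket and answer it."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
        server.bind(path)
        # Restrict the socket file before listening, so no client can connect earlier.
        os.chmod(path, 0o600)
        server.listen(1)
        ready.set()
        conn, _ = server.accept()
        with conn:
            conn.sendall(b"token-for:" + conn.recv(1024))


def request_token(path: str, caller: bytes) -> bytes:
    """Connect to the sidecar's socket and return its reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
        client.connect(path)
        client.sendall(caller)
        return client.recv(1024)


def demo() -> tuple:
    """Run server and client in one process; return the reply and the socket's mode."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "secrets.sock")
        ready = threading.Event()
        server = threading.Thread(target=serve_once, args=(path, ready))
        server.start()
        ready.wait(timeout=5)
        reply = request_token(path, b"app")
        mode = stat.S_IMODE(os.stat(path).st_mode)
        server.join(timeout=5)
        return reply, mode


reply, mode = demo()
print(reply, oct(mode))
```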

Create a dedicated ServiceAccount for each workload with tightly scoped RBAC roles. Because every container in a pod shares the pod’s ServiceAccount, grant only the permissions the pod as a whole needs: a pod whose sidecar merely monitors log files shouldn’t carry permissions to list secrets or access pod metadata. Zero-trust networking principles reduce the impact of a compromised container, limiting lateral movement if a sidecar is exploited.

Service mesh integration enhances security through mutual TLS enforcement between pods. The sidecar proxies encrypt and authenticate service-to-service traffic, whilst traffic between containers inside a pod stays on localhost and is not covered by mesh mTLS. That local hop still relies on safeguards such as Unix domain sockets with restricted permissions.
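A tightly scoped identity might look like the following sketch, which builds a ServiceAccount, a Role limited to reading one named ConfigMap, and the RoleBinding that joins them. The workload, namespace, and ConfigMap names are hypothetical, and the small `grants_secret_access` helper is an illustrative check rather than a substitute for a proper RBAC audit.

```python
def scoped_identity(namespace: str, workload: str, configmap: str) -> list:
    """Return ServiceAccount, Role and RoleBinding allowing 'get' on one ConfigMap only."""
    role_name = f"{workload}-read-config"
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": workload, "namespace": namespace},
    }
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],
                "resources": ["configmaps"],
                "resourceNames": [configmap],  # one named object, not every ConfigMap
                "verbs": ["get"],
            }
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": role_name, "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": workload, "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role_name},
    }
    return [service_account, role, binding]


def grants_secret_access(role: dict) -> bool:
    """True if any rule in the role names secrets or uses a wildcard resource."""
    return any(
        "secrets" in rule["resources"] or "*" in rule["resources"]
        for rule in role["rules"]
    )


# Hypothetical names for illustration.
service_account, role, binding = scoped_identity("payments", "orders-api", "log-shipper-config")
print(grants_secret_access(role))
```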

How Do Sidecar Patterns Impact Application Performance and Scalability?

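Proxy sidecars add latency to every proxied network call and consume additional memory in every pod. Figures of 10-50ms and 50-200MB per pod are sometimes quoted, but actual overhead depends heavily on the proxy, its configuration, and load, and a well-tuned proxy typically adds far less latency, so measure in your own environment. Because a sidecar scales with its pod rather than independently, that overhead grows with replica count. In return, sidecars reduce application complexity and provide consistent behaviour across services through standardised infrastructure capabilities.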

Every sidecar consumes resources and shares pod network, necessitating strict resource limits. Network communication includes latency overhead, which may not be acceptable for ultra-low latency requirements.
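A back-of-the-envelope estimate helps decide whether that overhead fits a latency budget. The sketch below assumes a sidecar-per-pod mesh in which each service-to-service hop passes through two proxies (the caller's and the callee's). The input values are placeholders to be replaced with measurements from your own environment, not benchmarks.

```python
def mesh_overhead(pods: int, proxy_memory_mb: float, hops: int,
                  per_proxy_latency_ms: float) -> dict:
    """Estimate cluster-wide memory and per-request latency added by proxy sidecars."""
    return {
        # One proxy per pod, so memory overhead grows linearly with replica count.
        "added_memory_mb": pods * proxy_memory_mb,
        # Each hop crosses the caller's proxy and the callee's proxy.
        "added_latency_ms": hops * 2 * per_proxy_latency_ms,
    }


# Placeholder inputs: 200 pods, 100 MB per proxy, a request that crosses 3 hops,
# and 1.5 ms added by each proxy traversal.
estimate = mesh_overhead(pods=200, proxy_memory_mb=100, hops=3, per_proxy_latency_ms=1.5)
print(estimate)  # {'added_memory_mb': 20000, 'added_latency_ms': 9.0}
```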

This approach allows development teams to focus on business logic whilst platform teams standardise cross-cutting concerns like logging, monitoring, and security across organisations. The result is more modular architecture where components can be developed, deployed, and scaled independently.

Monitor network I/O, latency, and error rates for traffic-handling sidecars to diagnose communication issues and optimise resource allocation. Sidecars represent integral components of application functionality – failing logging sidecars can blind operations to critical errors, whilst malfunctioning security proxies can expose services to security risks.
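As a sketch of what such monitoring might evaluate, the function below takes latency samples and request counts for one traffic-handling sidecar and reports which thresholds were breached. The threshold values, the sample data, and the function names are assumptions for illustration. In practice these numbers would come from the metrics system already in use.

```python
import math


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def sidecar_alerts(latencies_ms: list, requests: int, errors: int,
                   max_p95_ms: float = 50.0, max_error_rate: float = 0.01) -> list:
    """Return a human-readable alert for each breached threshold."""
    alerts = []
    p95 = percentile(latencies_ms, 95)
    if p95 > max_p95_ms:
        alerts.append(f"p95 latency {p95}ms exceeds {max_p95_ms}ms")
    error_rate = errors / requests if requests else 0.0
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    return alerts


# Illustrative samples: one slow outlier and 3 errors in 200 requests.
samples = [5, 7, 6, 8, 120, 6, 7, 5, 6, 7]
for alert in sidecar_alerts(samples, requests=200, errors=3):
    print(alert)
```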

What Are the Migration Strategies from Traditional to Sidecar-Based Architectures?

Migrate incrementally by identifying cross-cutting concerns in existing applications, starting with non-critical services for logging and monitoring sidecars. Gradually introduce proxy sidecars for traffic management, then security sidecars for policy enforcement. Use feature flags and parallel deployment strategies to minimise risk while validating sidecar integration with existing infrastructure.

Sidecars operate at pod level and interact through shared resources, enabling functionality extension without touching source code. Roll out a new sidecar version by changing its image tag in the pod template; the main container image does not need rebuilding, although Kubernetes replaces the affected pods as part of the rollout.

Platform teams can attach pre-configured operational sidecars to every pod, ensuring standardised policies. Declarative, GitOps-based approaches prevent configuration sprawl by defining standard configurations once as code rather than configuring each service manually.
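Upgrading a sidecar then amounts to editing a single field in the Deployment's pod template. The helper below is a minimal sketch of that edit on a manifest held as a Python dictionary. The deployment, container names, and image tags are hypothetical, and applying the changed manifest still triggers a normal rollout that replaces the pods.

```python
import copy


def set_sidecar_image(deployment: dict, sidecar_name: str, image: str) -> dict:
    """Return a copy of the Deployment with one container's image replaced."""
    updated = copy.deepcopy(deployment)
    for container in updated["spec"]["template"]["spec"]["containers"]:
        if container["name"] == sidecar_name:
            container["image"] = image
            return updated
    raise KeyError(f"no container named {sidecar_name!r}")


# Hypothetical Deployment fragment; only the fields the helper touches are shown.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "orders-api"},
    "spec": {
        "template": {
            "spec": {
                "containers": [
                    {"name": "app", "image": "example.com/orders-api:4.2"},
                    {"name": "log-shipper", "image": "example.com/log-shipper:1.0"},
                ]
            }
        }
    },
}

upgraded = set_sidecar_image(deployment, "log-shipper", "example.com/log-shipper:1.1")
print([c["image"] for c in upgraded["spec"]["template"]["spec"]["containers"]])
```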

Frequently Asked Questions

Do sidecar containers restart independently from main application containers?

Yes, at the container level. Sidecars share the pod lifecycle with main containers for scheduling and deletion, but when a single container fails, the kubelet restarts only that container according to the pod’s restartPolicy; the pod itself is not recreated. Configure health checks so that unhealthy sidecars are detected and restarted without disrupting the main application.

How do sidecar containers handle secrets and configuration?

Sidecar containers access secrets through Kubernetes secrets mounted as volumes or environment variables. Many organisations use dedicated secret management sidecars (like HashiCorp Vault agents) to inject secrets dynamically and handle automatic rotation without restarting applications.

Can sidecar containers communicate directly with external services?

Yes, sidecar containers can make external API calls and network connections. However, this should be carefully managed through network policies and service mesh configurations to maintain security boundaries and observability.

What happens to sidecar containers during rolling updates?

During rolling updates, entire pods (including sidecars) are replaced following Kubernetes deployment strategies. This ensures consistency but requires planning for graceful shutdown and startup sequences to maintain service availability.

How do you troubleshoot communication issues between main and sidecar containers?

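Use kubectl exec to access container shells, examine shared volumes and network interfaces, check container logs with kubectl logs (using the -c flag to select a container), and verify port bindings. Network debugging tools like netstat and tcpdump help identify connection issues; where minimal images lack them, kubectl debug can attach an ephemeral container that includes the tooling.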
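When the image includes a Python interpreter but no networking tools, a quick reachability check over localhost can be done with the standard library alone. The function below is a small sketch of that check; port 15000 in the example is a hypothetical sidecar port, not a value taken from any specific proxy's documentation.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # Containers in a pod share a network namespace, so the sidecar is on localhost.
    print(port_is_open("127.0.0.1", 15000))
```

A `False` result points to the sidecar not listening (crashed, still starting, or bound to a different port or interface), whereas `True` with failing requests suggests a problem above the transport layer.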

Are sidecar containers suitable for stateful applications?

Sidecar containers work with stateful applications but require careful consideration of data persistence, backup strategies, and StatefulSet configurations. Sidecars should typically remain stateless while supporting stateful main applications.

How do sidecar containers affect pod scheduling and node placement?

Pod scheduling considers the combined resource requirements of all containers. Sidecar containers may affect node placement and pod density. Use node affinity, taints, and tolerations to ensure appropriate scheduling for sidecar-enabled workloads.

What’s the difference between ambassador and sidecar patterns?

The ambassador pattern is a specific use case of the sidecar pattern focused on external service access and protocol translation. All ambassador containers are sidecars, but not all sidecars serve as ambassadors.

How do you monitor the health and performance of sidecar containers?

Implement health checks for sidecar containers, monitor resource consumption separately from main applications, use service mesh observability features, and set up alerts for sidecar-specific metrics like proxy error rates or log processing delays.
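Health checks for a sidecar are declared on its container like any other. The sketch below adds liveness and readiness probes to a container definition held as a Python dictionary. It assumes the sidecar exposes an HTTP health endpoint; the `/healthz` path, the port, and the timing values are hypothetical and should be taken from the sidecar's own documentation.

```python
import copy


def with_probes(container: dict, port: int, path: str = "/healthz") -> dict:
    """Return a copy of the container with HTTP liveness and readiness probes added."""
    probe = {
        "httpGet": {"path": path, "port": port},
        "periodSeconds": 10,
        "failureThreshold": 3,
    }
    updated = copy.deepcopy(container)
    # Liveness failures make the kubelet restart this container only.
    updated["livenessProbe"] = copy.deepcopy(probe)
    # Readiness fails faster, marking the container (and so the pod) not ready.
    updated["readinessProbe"] = dict(copy.deepcopy(probe), failureThreshold=1)
    return updated


# Hypothetical proxy sidecar with an assumed health port.
proxy = with_probes({"name": "proxy", "image": "example.com/proxy:2.0"}, port=15021)
print(proxy["livenessProbe"], proxy["readinessProbe"])
```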

Can you use multiple sidecar containers in a single pod?

Yes, pods can contain multiple sidecar containers for different purposes (e.g., logging, monitoring, and proxy). However, this increases complexity and resource consumption, so carefully evaluate the trade-offs between consolidation and separation of concerns.

About SoftwareSeni: We help organisations modernise their infrastructure using cloud-native patterns like the Sidecar Pattern. Our team of experienced architects guides enterprises through container orchestration and microservices adoption.

Published: September 4, 2025 | Author: James A. Wondrasek