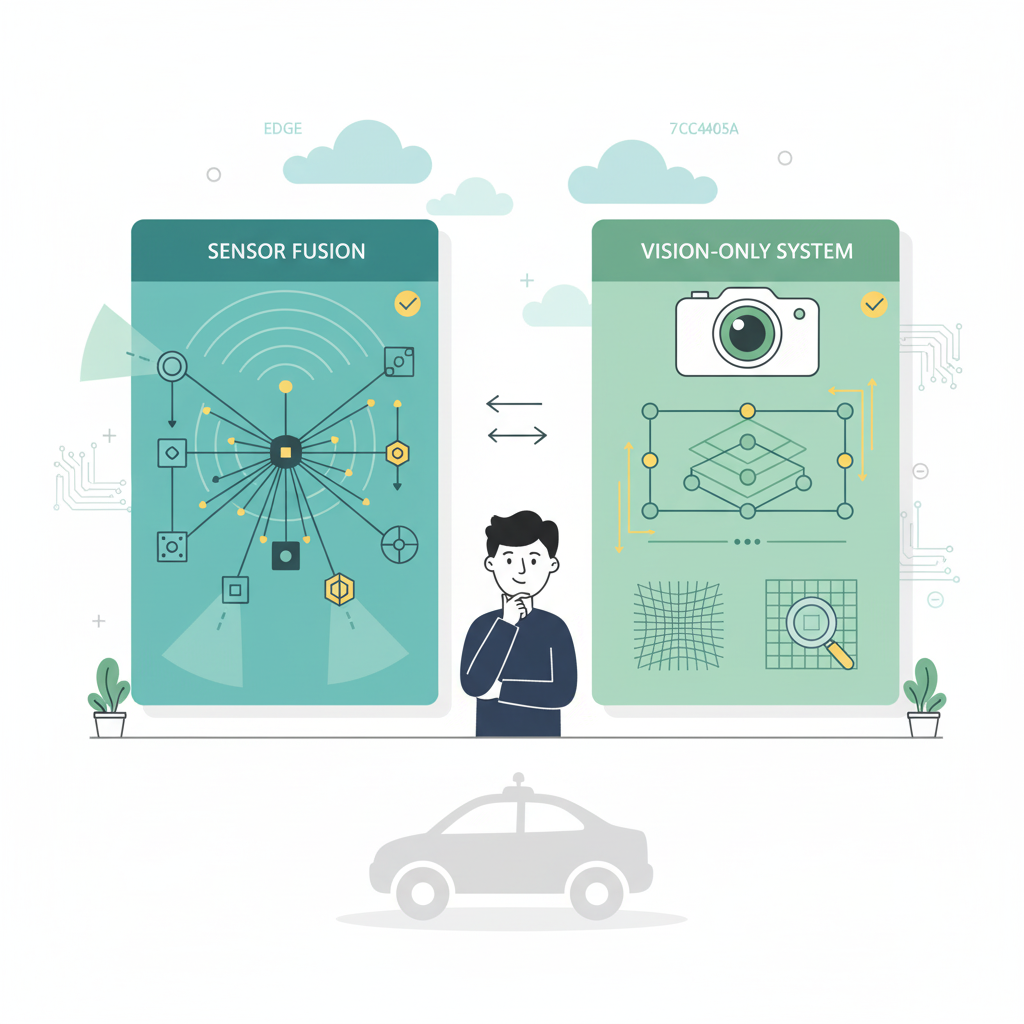

If you’re evaluating autonomous vehicle technology for fleet deployment or trying to understand where the industry is heading, you’ve got two main approaches to consider: sensor fusion and vision-only systems. For a broader view of how these technologies fit into the Australian market, see our strategic overview for technology leaders.

Sensor fusion combines LiDAR, cameras, and radar to build a redundant perception system. Vision-only relies on cameras and neural networks to do the heavy lifting. Each has trade-offs in cost, scalability, and safety.

Let’s break down how they work, who’s using what, and what it means for your deployment decisions.

What Are the Fundamental Technical Differences Between Sensor Fusion and Vision-Only Approaches?

Sensor fusion combines data from LiDAR, cameras, radar, and ultrasonic sensors to create a comprehensive environmental model. Vision-only systems use cameras exclusively, relying on neural networks to infer depth and identify objects through computer vision algorithms without direct distance measurement.

Waymo’s system uses 13 external cameras, 4 LiDAR sensors, 6 radar units, and external audio receivers for 360-degree perception. The fusion layer takes heterogeneous data and maintains state estimation across sensor types. When one sensor degrades or fails, the system continues operating on the remaining inputs.
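To make the redundancy idea concrete, here is a minimal sketch of one common fusion technique: an inverse-variance weighted average of range readings that skips any sensor that has dropped out. This is not Waymo’s implementation. The class and function names and the noise figures are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeReading:
    sensor: str                   # e.g. "lidar", "radar", "camera"
    distance_m: Optional[float]   # None when the sensor is degraded or offline
    sigma_m: float                # assumed 1-sigma measurement noise, in metres


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted average over the sensors that reported.

    Returns (fused distance, fused 1-sigma uncertainty), both in metres.
    """
    live = [r for r in readings if r.distance_m is not None]
    if not live:
        raise ValueError("no live sensors: perception cannot continue")
    # Sensors with lower noise receive proportionally more weight.
    weights = [1.0 / (r.sigma_m ** 2) for r in live]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, live)) / total
    fused_sigma = (1.0 / total) ** 0.5
    return fused, fused_sigma


# Hypothetical readings for one object roughly 40 m ahead.
readings = [
    RangeReading("lidar", 40.02, 0.02),
    RangeReading("radar", 40.30, 0.50),
    RangeReading("camera", 41.00, 1.50),
]
print(fuse_ranges(readings))

# Simulate a LiDAR dropout: the estimate survives, with higher uncertainty.
readings[0] = RangeReading("lidar", None, 0.02)
print(fuse_ranges(readings))
```

With all three sensors live, the estimate is dominated by the most precise one. When that sensor drops out, the system still returns an answer, just with a wider uncertainty band.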

Tesla FSD takes a different path. Eight cameras provide 360-degree visibility at up to 250 metres. Tesla removed radar in May 2021 and now relies entirely on vision. The system uses occupancy networks to infer 3D environments from 2D camera images.

Sensor fusion provides direct physical measurement of distance through active sensing. Vision-only systems infer depth computationally through neural networks. Camera-only systems scale more easily across consumer vehicles because the hardware costs less.

Now that we’ve covered what each approach does, let’s compare their accuracy.

How Do LiDAR and Camera-Based Depth Estimation Compare in Accuracy and Reliability?

LiDAR provides centimetre-level accuracy up to 200+ metres with consistent performance regardless of lighting. Camera-based depth estimation achieves 2-5% error at 50 metres, degrading to 10-15% at 100 metres, with performance dropping in low light, direct sun, and adverse weather.

Modern automotive LiDAR achieves 1-2cm accuracy at ranges up to 200+ metres using 905nm or 1550nm wavelengths. Spinning units generate 300,000-600,000 points per second; solid-state versions push past 1 million points per second. Day or night, the performance stays consistent.

Camera depth estimation is harder. Stereo setups achieve 2-5% error at 50m, but this degrades to 10-15% at 100 metres. Low-light performance drops 30-50% compared to daylight conditions.
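Why does stereo accuracy fall off with distance? A rough sketch using the standard pinhole stereo model, where depth is focal length times baseline divided by disparity, shows the shape of the problem. The focal length, baseline, and disparity error below are assumed values for illustration, not the specification of any production camera.

```python
def stereo_depth_error_m(distance_m: float, focal_px: float,
                         baseline_m: float, disparity_err_px: float) -> float:
    """First-order depth error for a rectified stereo pair.

    From Z = f * B / d, a disparity error dd produces a depth error of
    approximately Z**2 / (f * B) * dd.
    """
    return (distance_m ** 2) / (focal_px * baseline_m) * disparity_err_px


def relative_error_pct(distance_m: float, focal_px: float,
                       baseline_m: float, disparity_err_px: float) -> float:
    """Depth error expressed as a percentage of the true distance."""
    err = stereo_depth_error_m(distance_m, focal_px, baseline_m, disparity_err_px)
    return 100.0 * err / distance_m


# Assumed rig: 1000 px focal length, 0.3 m baseline, 0.25 px matching error.
for z in (25, 50, 100):
    print(z, "m:", round(relative_error_pct(z, 1000.0, 0.3, 0.25), 2), "%")
```

In this model absolute error grows with the square of distance, so relative error doubles each time the range doubles. Real systems also contend with calibration drift, lens quality, and matching failures, so the simple model will not reproduce the quoted figures exactly.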

Weather affects both approaches. LiDAR signal attenuation runs 10-30% in moderate rain, jumping to 50%+ in heavy rain or fog. Cameras struggle with lens contamination, direct sun glare, and water droplet distortion.

What Are the Key Architectural Differences Between Tesla and Waymo Approaches?

Waymo uses LiDAR, cameras, and radar with pre-mapped geofenced operation. Tesla relies on 8 cameras using end-to-end neural networks trained on fleet data for broader geographic capability without HD maps.

Waymo’s 6th generation system packs 13 cameras, 4 LiDAR units (including 360-degree long-range), and 6 radar sensors. The vehicles operate in geofenced areas with pre-built HD maps at 10cm accuracy. Waymo has logged over 20 million autonomous miles, plus 20 billion miles in simulation.

Tesla’s HW4 computer uses custom-designed chips with reported 300+ TOPS inference performance. Eight cameras handle perception: three forward-facing (narrow, main, wide), two side-forward, two side-rearward, and one rear. No HD maps. Real-time scene understanding only. With 4M+ vehicles collecting data, they’re training on edge cases at a scale no one else can match.

The philosophies differ. Waymo runs pre-deployment testing in controlled domains before any public operation. Tesla deploys in shadow mode on production vehicles, gathering edge cases from real-world driving at scale.

Tesla and Waymo build their own compute platforms. For everyone else, there’s Nvidia.

Where Does Nvidia Fit in the Autonomous Vehicle Technology Landscape?

If you’re not Tesla or Waymo, you’re probably buying your compute from Nvidia. They provide the computing infrastructure powering most AV development through their DRIVE platform. For deeper analysis of these companies and their partnership strategies, see our guide on autonomous vehicle companies and strategic partnership models.

DRIVE Orin delivers 254 TOPS with 8 ARM Cortex-A78AE cores and an Ampere GPU. It’s been shipping since 2022. DRIVE Thor steps up to 2000 TOPS, targeting Level 4 autonomy with production expected 2025-2026.

The DRIVE Hyperion 9 reference architecture specifies 12 cameras, 9 radar units, 3 LiDAR sensors, and 12 ultrasonics. Mercedes-Benz, Volvo/Polestar, Jaguar Land Rover, BYD, and Lucid all use Nvidia. Chinese AV companies like WeRide, Pony.ai, and AutoX run predominantly on Nvidia hardware.

The software stack includes DRIVE OS (a safety-certified real-time operating system ready for ASIL-D compliance) and DRIVE Sim, an Omniverse-based simulation platform for virtual testing.

Why Is Edge Computing So Important for Autonomous Vehicle Safety and Performance?

Edge computing enables sub-100 millisecond response times needed for safe autonomous operation. At 60 mph, a vehicle travels 88 feet per second, making cloud latency unacceptable for safety decisions. On-vehicle processing handles 1-2 terabytes of sensor data per hour locally.

Human reaction time runs 200-300ms. Autonomous systems need to do better. Safety decisions require sub-100ms latency. At 60 mph (88 ft/s), 100ms of latency means 8.8 feet of travel before any response. Advanced systems now achieve end-to-end latency of 2.8-4.1ms from sensor input to actuator output.
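The arithmetic behind those numbers is easy to check. The sketch below converts speed and latency into distance travelled before any response. The function names are ours, and the 250ms human figure is simply the midpoint of the 200-300ms range quoted above.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def feet_per_second(speed_mph: float) -> float:
    """Convert miles per hour to feet per second."""
    return speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR


def distance_during_latency_ft(speed_mph: float, latency_ms: float) -> float:
    """Distance covered while the system (or driver) has not yet reacted."""
    return feet_per_second(speed_mph) * latency_ms / 1000.0


# Compare a fast on-vehicle pipeline, the 100ms budget, and a human driver.
for label, latency_ms in [("4.1ms pipeline", 4.1),
                          ("100ms budget", 100.0),
                          ("250ms human", 250.0)]:
    print(label, round(distance_during_latency_ft(60.0, latency_ms), 2), "ft")
```

Adding a network round trip to the latency argument shows how quickly a cloud-dependent design would eat into the 100ms budget.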

Level 4 systems need 200-500 TOPS for real-time inference. A sensor fusion suite generates 2-4 TB of raw data per hour.

Power consumption is a real constraint. Sensor fusion systems draw 500-2000W for compute platforms plus sensors. That’s a lot of heat to dissipate in an enclosed vehicle. Vision-only systems run cooler at 100-300W. For EVs, sensor fusion can reduce range by 1-5% depending on configuration. Vision-only systems affect range by 1-2%.

The split is straightforward. Real-time perception, planning, and control run 100% on-vehicle. Map updates, fleet learning, and analytics can handle cloud latency. You can’t send a terabyte per hour to the cloud and wait for decisions to come back. The physics don’t work.
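The bandwidth side of that argument is simple arithmetic. This sketch converts an hourly data volume into the sustained uplink a vehicle would need to stream it all, assuming decimal terabytes (10^12 bytes) and ignoring protocol overhead and compression.

```python
def sustained_uplink_gbps(terabytes_per_hour: float) -> float:
    """Sustained uplink, in gigabits per second, needed to stream raw sensor data."""
    bits_per_hour = terabytes_per_hour * 1e12 * 8   # decimal TB to bits
    return bits_per_hour / 3600 / 1e9               # per second, in Gbit/s


# The article's figures range from 1 to 4 TB of sensor data per hour.
for tb in (1, 2, 4):
    print(tb, "TB/h needs", round(sustained_uplink_gbps(tb), 2), "Gbit/s")
```

Even the low end works out to a multi-gigabit sustained uplink per vehicle, before any decision has made the return trip.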

What Do SAE Level 4 and Level 5 Autonomy Mean for Sensor Architecture Requirements?

Understanding these levels matters because they directly impact what sensor architecture you need for regulatory approval.

The SAE definitions are clear. Level 4 means the vehicle handles all driving tasks within a specific operational design domain (ODD), with no human fallback required inside that domain. Level 5 handles all driving tasks everywhere, in all conditions, with no restrictions.

Waymo’s Level 4 ODD covers clear weather, mapped urban areas, typically at speed limits under 45 mph. Level 5 would require handling all weather, all road types, all countries, and unexpected scenarios.
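One way to picture an ODD is as a set of explicit conditions the vehicle checks before and during operation. The sketch below is a hypothetical data structure, not Waymo’s actual ODD definition. The field names and the example values (clear weather, mapped area, speed limits under 45 mph) are taken loosely from the description above.

```python
from dataclasses import dataclass
from enum import Enum


class Weather(Enum):
    CLEAR = "clear"
    RAIN = "rain"
    FOG = "fog"
    SNOW = "snow"


@dataclass(frozen=True)
class OperationalDesignDomain:
    allowed_weather: frozenset[Weather]
    speed_limit_below_mph: float   # roads must be posted strictly below this
    requires_hd_map: bool


@dataclass(frozen=True)
class DrivingContext:
    weather: Weather
    speed_limit_mph: float
    inside_mapped_area: bool


def within_odd(odd: OperationalDesignDomain, ctx: DrivingContext) -> bool:
    """Return True only if every ODD condition holds for the current context."""
    if ctx.weather not in odd.allowed_weather:
        return False
    if ctx.speed_limit_mph >= odd.speed_limit_below_mph:
        return False
    if odd.requires_hd_map and not ctx.inside_mapped_area:
        return False
    return True


# Illustrative Level 4 style ODD: clear weather, mapped streets, under 45 mph.
example_odd = OperationalDesignDomain(
    allowed_weather=frozenset({Weather.CLEAR}),
    speed_limit_below_mph=45.0,
    requires_hd_map=True,
)
print(within_odd(example_odd, DrivingContext(Weather.CLEAR, 35.0, True)))
print(within_odd(example_odd, DrivingContext(Weather.FOG, 35.0, True)))
```

A Level 5 system would, in effect, have no such check: every context would have to be inside the domain.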

Where are we today? Level 4 is achieved: Waymo operates in Phoenix and San Francisco, Cruise is paused, AutoX runs in China. For more on robotaxi operations and commercial viability, see our analysis of robotaxis, warehouse automation and autonomous delivery. Level 5? No company has demonstrated true Level 5 capability as of 2024. Industry consensus has shifted the timeline from 2025 predictions to 2030-2035 or later.

Sensor fusion dominates Level 4 deployments for a simple reason: regulators accept the redundancy argument. If your LiDAR fails, cameras and radar keep working. If cameras are blinded by sun glare, LiDAR still sees. Vision-only systems don’t have this fallback, which makes regulatory approval harder.

The difference between approaches becomes clearer when you look at the neural network architectures.

How Do Neural Network Architectures Differ Between the Two Approaches?

Sensor fusion typically uses modular pipelines with separate networks for each sensor and a fusion layer. Vision-only systems increasingly adopt end-to-end transformers that process raw camera data directly to driving outputs, with BEV representations becoming standard for unified scene understanding.

Waymo runs separate networks for object detection, tracking, prediction, and planning. A fusion layer combines outputs using attention mechanisms or late fusion techniques.
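As a rough illustration of late fusion, suppose each sensor’s network has already detected the same object and reported its own confidence. One simple combination rule is noisy-OR, which assumes the sensors’ errors are independent. This is a textbook technique chosen for illustration, not a description of Waymo’s fusion layer, and the function name is hypothetical.

```python
from math import prod


def late_fuse_confidence(per_sensor_confidence: dict[str, float]) -> float:
    """Noisy-OR late fusion of per-sensor detection confidences.

    Treats each confidence as an independent probability that the object is
    really there, and returns the probability that at least one is right.
    """
    for sensor, p in per_sensor_confidence.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{sensor}: confidence {p} is outside [0, 1]")
    return 1.0 - prod(1.0 - p for p in per_sensor_confidence.values())


# Hypothetical confidences for one object already matched across sensors.
print(late_fuse_confidence({"camera": 0.6, "lidar": 0.7, "radar": 0.5}))
```

The independence assumption is generous, since rain or glare can degrade several sensors at once, which is one reason production systems use learned fusion rather than a fixed formula.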

Tesla takes the end-to-end approach. A single network processes camera pixels and outputs driving decisions. Recent BEV transformer architectures show improved performance over earlier multi-stage approaches.

BEV transformers convert multi-view camera images to a bird’s-eye-view representation. This enables unified 3D perception without explicit depth estimation. The catch? Training requires massive datasets. Production systems need 1B+ labelled frames.

Modular pipelines are easier to debug. When something breaks, you know which module failed. End-to-end systems are harder to interpret but potentially more capable once trained.

What Are the Total Cost Implications for Enterprise Deployment of Each Approach?

Sensor fusion vehicles cost $150,000-200,000 in hardware. Vision-only hardware costs under $2,000 per vehicle. However, total cost of ownership must include fleet operations, mapping, validation, and regulatory compliance where costs converge. For a detailed framework on calculating ROI and assessing organisational readiness, see our implementation framework guide.

Hardware costs have dropped fast. In 2015, LiDAR cost $75,000 per unit. Premium LiDAR like Luminar Iris now runs $500-1,000 per unit at scale, and budget Chinese suppliers sell units for $150-200. Camera modules run $50-200 each. Tesla’s entire camera suite costs an estimated $500-1,500.

Total system costs diverge more. Waymo robotaxi hardware runs an estimated $150,000-200,000 per vehicle. Tesla charges $8,000 for the FSD option on top of the vehicle’s standard hardware.

Operational costs matter too. HD mapping costs $0.10-0.50 per mile to create and maintain. Fleet operations run $50,000-100,000 per vehicle per year for robotaxi service. Insurance costs more for Level 4 commercial operations.
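To see how hardware and operating costs combine, here is a back-of-envelope cost-per-mile sketch. The hardware, operations, and mapping figures come from this section. The five-year service life and 50,000 miles per year are assumptions added purely for illustration, and insurance is left out.

```python
def cost_per_mile_usd(hardware_usd: float, service_life_years: float,
                      annual_ops_usd: float, annual_miles: float,
                      mapping_usd_per_mile: float) -> float:
    """Straight-line hardware amortisation plus operations plus mapping, per mile."""
    hardware_per_mile = hardware_usd / (service_life_years * annual_miles)
    ops_per_mile = annual_ops_usd / annual_miles
    return hardware_per_mile + ops_per_mile + mapping_usd_per_mile


# Assumed utilisation (hypothetical): 5-year life, 50,000 miles per year.
LIFE_YEARS = 5
ANNUAL_MILES = 50_000

low = cost_per_mile_usd(150_000, LIFE_YEARS, 50_000, ANNUAL_MILES, 0.10)
high = cost_per_mile_usd(200_000, LIFE_YEARS, 100_000, ANNUAL_MILES, 0.50)
print("sensor fusion robotaxi:", round(low, 2), "to", round(high, 2), "USD/mile")
```

Under these assumptions, fleet operations contribute more per mile than the sensor hardware does, which is why the hardware price gap alone overstates the difference in total cost of ownership.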

Waymo spent 15+ years and $5.7B+ to achieve Level 4 deployment. New entrants should expect 5-10 years minimum to commercial deployment.

Frequently Asked Questions

Can autonomous cars drive safely in rain and snow? Sensor fusion systems with LiDAR perform better in rain and snow than vision-only. LiDAR retains 70-90% of its signal in moderate rain, while camera performance drops 30-50%. Heavy fog challenges both, though sensor fusion provides a marginal advantage.

Why doesn’t Tesla use LiDAR like Waymo? Tesla argues humans drive with vision alone, so machines can too. This enables lower hardware costs and fleet-wide data collection but requires solving harder computer vision problems.

Which approach has better safety data? Waymo publishes detailed safety reports showing 0.21 contact events per million miles, 84% fewer than the human benchmark. Tesla FSD safety data is less transparent, with 58 incidents under NHTSA investigation as of 2024, including 13 fatal.

How much computing power do autonomous vehicles need? Level 4 systems require 200-500 TOPS minimum. See the Nvidia and edge computing sections above for details on current platforms and latency requirements.

What happens if sensors fail during operation? Sensor fusion provides redundancy, allowing continued safe operation if one sensor type fails. Vision-only systems must handle failures through software, typically initiating safe stops.

How do maintenance costs compare? LiDAR sensors require periodic calibration and have finite lifespans of 3-5 years for spinning units. Camera-based systems have lower maintenance requirements but may need frequent software updates.

What role do HD maps play in each approach? Sensor fusion systems like Waymo rely on pre-built HD maps at 10cm accuracy for localisation. Vision-only systems like Tesla aim to operate without pre-mapping, using real-time scene understanding and GPS for navigation.

Which companies use sensor fusion versus vision-only? Sensor fusion: Waymo, Cruise, Aurora, Motional, Zoox. Vision-only: Tesla. Hybrid approaches: Mobileye uses camera-first with radar/LiDAR validation.

How do both approaches handle construction zones? Both struggle with construction zones due to changed layouts and temporary signage. Sensor fusion handles physical obstacles better. Vision-only may miss unmarked hazards.

How does power consumption compare between approaches? Sensor fusion systems consume 500-2000W for sensors and compute. Vision-only systems typically use 100-300W. This affects EV range by 1-5% for sensor fusion, 1-2% for vision-only.

When will true Level 5 autonomy arrive? Industry consensus has shifted from 2025 predictions to 2030-2035 or later. Neither approach has demonstrated Level 5 capability in uncontrolled environments.

What are the biggest unsolved technical challenges? Both approaches struggle with rare edge cases, adverse weather, and scenarios not well represented in training data. Sensor fusion faces cost scaling challenges. Vision-only faces depth accuracy and low-light performance challenges.