Spec-driven development looks great in demos. Create a specification, feed it to an AI, get working code. The productivity gains are obvious.

Then you try to integrate it into your existing CI/CD pipeline and discover the problem. The tools work fine by themselves, but getting them to work with GitHub Actions or Jenkins turns into an exercise in duct tape and workarounds.

Your team won’t adopt something that slows them down, no matter how elegant the technology behind it is. This article, part of our comprehensive spec-driven development guide, gives you the platform-specific integration patterns, automation mechanisms, and lifecycle management strategies you need to make spec-driven development work in production environments.

The outcome you’re after: automated specification validation that catches issues early, reduced drift between specs and implementations, and measurable productivity gains that justify the investment to whoever holds the budget.

Why is workflow integration the make-or-break factor for spec-driven development?

Most spec-driven development initiatives fail at workflow integration, not at technology evaluation. You run a successful proof-of-concept, the team gets excited about the possibilities, and then the project dies when nobody can figure out how to integrate specification validation into the existing pipeline without doubling build times.

I’m sure you’ve been there: prompt, prompt, prompt, and you have a working application. But getting it to production requires more than vibes and prompts. It needs workflow integration.

The “works in isolation” trap catches everyone. Specification validation adds pipeline stages. Code generation increases execution time. Quality gates introduce new failure modes. If you’re not careful, you’ve added three minutes to every build and your developers are quietly reverting to manual coding because it’s actually faster than waiting for the pipeline to run.

Developer experience matters more than tooling elegance. By one estimate, about 80% of microservice failures trace back to inter-service calls: trouble concentrates at the seams between components, and workflow complexity behaves the same way. Your spec-driven development integration needs to reduce this complexity, not add to it.

The integration requirement is simple: spec-driven development must feel like a natural evolution of how your team already works. Not a disruptive transformation that requires re-learning everything from scratch.

Your success criterion is straightforward. Teams should adopt spec-driven practices because they improve workflow velocity and code quality. Not despite the workflow friction you’ve introduced.

What are the core spec-driven workflows to understand?

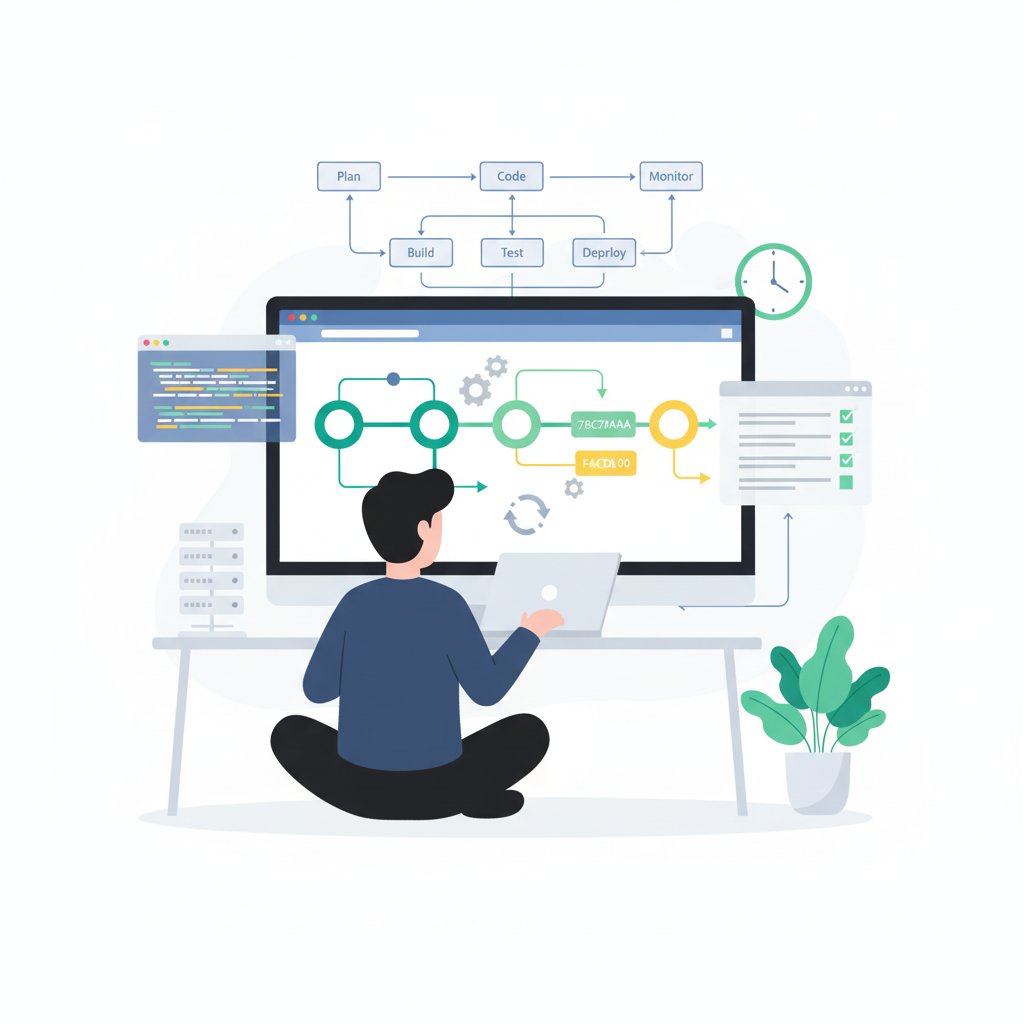

Two major workflow models dominate spec-driven development: GitHub’s 4-phase workflow and AWS Kiro’s 3-phase approach. Understanding both helps you choose the right model for your team’s needs.

GitHub’s 4-phase workflow breaks things into discrete stages. Phase 1 is Specify—create specifications as your source of truth. Phase 2 is Plan—AI breaks specifications into implementation tasks. Phase 3 is Tasks—structured task lists with acceptance criteria. Phase 4 is Implement—AI generates code from specifications and tasks.

Each phase transition provides an automation opportunity. After Specify, trigger validation checks. After Plan, run feasibility analysis. After Tasks, assign work. After Implement, run tests. The GitHub Spec Kit provides tooling that implements this workflow if you’re already in the GitHub ecosystem.

AWS Kiro’s 3-phase workflow simplifies the model a bit. Phase 1 is Specification—create and validate specs. Phase 2 is Planning—AI generates an implementation plan. Phase 3 is Execution—code generation with agent hooks for automation.

The difference is in the automation model. Kiro introduces concepts like ‘steering’ (essentially rules that agents follow before responding) and ‘agent hooks’ (automations you can offload from the spec, which helps keep the context window from growing too large). These agent hooks are file system watchers that continuously monitor specification changes and trigger workflows automatically, which is quite clever.

Choosing your workflow depends on context. Smaller teams often benefit from Kiro’s simpler model, while larger teams with complex specifications tend to prefer GitHub’s more granular phases, which support detailed review processes. Your existing CI/CD platform matters too. If you’re already on GitHub Actions, the Spec Kit integration is straightforward. If you’re AWS-centric, Kiro’s native integration with AWS services makes more sense.

Common patterns emerge across both approaches. Specifications always come first. AI assists with task breakdown. Automated validation gates catch specification issues before they become code issues. The workflow captures context in living documents, not only in ephemeral prompts. This matters more than most people realise when you’re six months into a project.

How do you integrate spec-driven development with CI/CD pipelines?

Integration starts with treating specifications as first-class artifacts in your pipeline alongside code. Not documentation that lives in a wiki somewhere. Actual artifacts that flow through your pipeline with their own validation, versioning, and deployment stages.

Your pipeline needs six new stages. First, specification validation runs syntax checking, semantic validation, and standards compliance. Second, code generation triggers automatically when specifications are committed or merged. Third, generated code validation ensures the output meets your quality standards using automated quality gates. Fourth, testing integration runs automated tests that validate specification-to-implementation alignment. Fifth, quality gates provide checkpoints that prevent non-compliant specifications from progressing. Sixth, deployment automation uses specifications to drive deployment configurations.

Automated specification validation catches issues early. Lint checks verify format compliance. Semantic validation ensures specifications are complete and consistent. Static analysis tools integrated into the CI/CD pipeline enforce those rules on every change. The earlier you catch problems, the cheaper they are to fix.
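As a rough illustration of the semantic layer, the Python sketch below checks an OpenAPI-style document that has already been parsed into a dict (for example with a YAML loader). The rules (every operation needs a unique operationId, a summary, and at least one response) are assumptions chosen for the example, not Spectral’s ruleset, and each message says what to fix rather than only flagging a failure.

```python
# Illustrative semantic checks for a parsed OpenAPI-style document.
# The rule set is an assumption for this example, not a standard ruleset.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def semantic_errors(spec: dict) -> list:
    """Return actionable messages for problems found in the spec."""
    errors = []
    seen_ids = {}  # operationId -> first operation that used it
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            where = f"{method.upper()} {path}"
            op_id = op.get("operationId")
            if not op_id:
                errors.append(f"{where}: add an operationId so generated handlers get stable names")
            elif op_id in seen_ids:
                errors.append(f"{where}: operationId '{op_id}' is already used by {seen_ids[op_id]}")
            else:
                seen_ids[op_id] = where
            if not op.get("summary"):
                errors.append(f"{where}: add a summary describing what the operation does")
            if not op.get("responses"):
                errors.append(f"{where}: declare at least one response")
    return errors


example = {
    "openapi": "3.0.3",
    "paths": {
        "/users": {
            "get": {
                "operationId": "listUsers",
                "summary": "List users",
                "responses": {"200": {"description": "OK"}},
            },
            "post": {"operationId": "listUsers", "responses": {}},
        }
    },
}

for message in semantic_errors(example):
    print(message)
```

In a pipeline, a non-empty list would fail the job, and each message could be posted as a PR comment.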

Code generation triggers need careful orchestration. Merge request triggers work for most teams—when a specification is approved and merged, generation runs automatically. Scheduled generation suits teams with complex specifications that benefit from batched processing. On-demand execution gives developers control for rapid iteration during active development.

Caching strategies maintain pipeline performance. Cache validation results for unchanged specifications: if a specification hasn’t changed since the last successful validation, skip re-validation. Cache generated code when specifications are stable. Cache dependency downloads between pipeline runs. In a typical setup, these patterns can cut validation overhead for unchanged specifications from around 30 seconds to under 10, which matters when your team runs dozens of builds per day.
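A minimal sketch of the first caching pattern, assuming validation results are keyed by a SHA-256 hash of each specification file and stored in a JSON file that your CI platform’s cache step persists between runs. The validate callable is a stand-in for whatever linter you run; only passing results are cached, so a failing spec is always re-checked.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, Iterable


def spec_digest(path: Path) -> str:
    """Hash the file's bytes; any edit to the spec yields a new digest."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def validate_with_cache(
    paths: Iterable[Path], validate: Callable[[Path], bool], cache_file: Path
) -> Dict[str, str]:
    """Validate only specs whose content changed since their last passing run."""
    cache = json.loads(cache_file.read_text()) if cache_file.exists() else {}
    results = {}
    for path in paths:
        key, digest = str(path), spec_digest(path)
        if cache.get(key) == digest:
            results[key] = "cached"  # unchanged since last success: skip
            continue
        if validate(path):
            results[key] = "passed"
            cache[key] = digest  # remember the version that passed
        else:
            results[key] = "failed"
            cache.pop(key, None)  # never cache failures
    cache_file.write_text(json.dumps(cache, indent=2))
    return results


# Usage: the second run is served from the cache until the file changes.
calls = []


def fake_validate(path: Path) -> bool:  # stand-in for a real linter call
    calls.append(path)
    return True


with tempfile.TemporaryDirectory() as tmp:
    spec = Path(tmp) / "users.yaml"
    cache_file = Path(tmp) / "spec-validation-cache.json"
    spec.write_text("openapi: 3.0.3\n")
    first = validate_with_cache([spec], fake_validate, cache_file)
    second = validate_with_cache([spec], fake_validate, cache_file)
    spec.write_text("openapi: 3.1.0\n")
    third = validate_with_cache([spec], fake_validate, cache_file)

print(first, second, third)
```

Hashing content rather than trusting file timestamps matters in CI, where a fresh checkout gives every file a new modification time.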

Error handling needs clear procedures. When specification validation fails, provide actionable feedback about what’s wrong and how to fix it. When code generation fails, preserve the specification and flag it for manual review—don’t let it silently disappear into failed build logs. When generated code fails tests, determine whether the specification or the generation process needs adjustment.

How do you implement spec-driven integration on different CI/CD platforms?

Platform selection impacts integration patterns more than you’d expect. Each platform has its own quirks. When evaluating CI/CD compatibility for spec-driven workflows, consider these platform-specific patterns.

GitHub Actions offers native Spec Kit integration. The marketplace provides actions for specification validation like Spectral for OpenAPI linting. Configure workflows that trigger on pull requests, run specification linting in parallel with code linting, and post validation results as PR comments so developers get immediate feedback.

GitLab CI works similarly. Your .gitlab-ci.yml defines stages for spec-driven workflows. Define a validate-specs job in an early pipeline stage, configure it to run in parallel with unit tests, and create a generate-code stage that runs after validation passes. Pretty straightforward if you’re already comfortable with GitLab pipelines.

Jenkins takes a plugin-based approach. Jenkins usually relies on SCM webhooks or cron triggers defined in the Jenkinsfile to start pipelines. Install the Pipeline Utility Steps plugin for file manipulation and the HTTP Request plugin for triggering external generation tools. It takes more manual configuration than modern platforms, but it works.

AWS CodePipeline integrates naturally with Kiro for AWS-centric teams. Kiro agent hooks can be connected to CodePipeline stages through Lambda function triggers. Configure the pipeline with source, build, test, and deploy stages, adding manual approval gates where you need human oversight.

Common patterns work across all platforms. Run specification validation as early as possible so the pipeline fails fast. Keep pipelines short, ideally under 10-15 minutes, using caching and parallelisation. Developers won’t wait around for 30-minute builds.

How do hooks and automation enhance spec-driven workflows?

Hooks turn your spec-driven workflow from manual to automatic. That’s where the productivity gains really show up.

Pre-generation hooks run before code generation starts. They check specification completeness and verify prerequisites like required dependencies or environment variables. Use pre-commit hooks for early local validation: install Husky to manage git hooks and configure it to run the Spectral CLI for OpenAPI validation. The goal is catching issues in seconds locally rather than minutes in CI, and developers appreciate fast feedback.
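If you prefer to keep hook logic in a script that Husky (or a plain .git/hooks/pre-commit file) calls, a sketch along these lines limits linting to the specification files actually staged for commit, which keeps the hook fast. It assumes specs live under a specs/ directory, that the Spectral CLI is installed and on PATH as spectral, and that a Spectral ruleset (for example .spectral.yaml) exists in the repository. The directory layout and function names are illustrative.

```python
import subprocess
from pathlib import PurePosixPath
from typing import List

# Assumed layout: specifications live under specs/ as YAML or JSON files.
SPEC_DIR = "specs"
SPEC_SUFFIXES = {".yaml", ".yml", ".json"}


def staged_files() -> List[str]:
    """Files added, copied, or modified in the index (i.e. in this commit)."""
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [line for line in out.splitlines() if line]


def select_spec_files(paths: List[str]) -> List[str]:
    """Keep only staged paths that look like specification files."""
    selected = []
    for p in paths:
        pure = PurePosixPath(p)
        if pure.parts[:1] == (SPEC_DIR,) and pure.suffix in SPEC_SUFFIXES:
            selected.append(p)
    return selected


def lint_specs(spec_files: List[str]) -> int:
    """Run the Spectral CLI; a non-zero return code should block the commit."""
    if not spec_files:
        return 0  # no specs staged: exit immediately to keep commits fast
    return subprocess.run(["spectral", "lint", *spec_files]).returncode


# In the hook script itself, finish with:
#     raise SystemExit(lint_specs(select_spec_files(staged_files())))
```

Spectral exits non-zero when it finds errors, so the hook’s exit code passes straight through to git and blocks the commit.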

Post-generation hooks execute tests against generated code, run static analysis, and update documentation. Configure pytest or Jest to run automatically, integrate SonarQube for quality analysis, and trigger documentation generators when specifications change. Regenerating documentation on every specification change keeps your docs in sync with reality, and automated tests catch issues before they reach production.

Validation hooks enforce production readiness through security scanning (OWASP ZAP for common vulnerabilities), performance testing against SLAs, and compliance checks for regulatory requirements. These run as pre-deployment gates implementing our production validation automation—if validation fails, deployment is blocked. Nobody wants to discover security issues in production.

Deployment hooks deploy specifications to API gateways, update service mesh configurations, and configure monitoring for new endpoints. Blue-green deployments provide instant rollback if issues appear. This end-to-end automation is what makes spec-driven development faster than manual processes.

Agent hooks take a different approach. They are file system watchers that trigger workflows on specification changes, monitoring continuously rather than only at git events, and they can integrate with monitoring systems for richer context. Git hooks execute at specific events: commit, push, merge. Agent hooks watch constantly and can trigger complex workflows based on what changed. For example, you can create an agent hook that runs the relevant unit tests every time a specification is updated, providing continuous validation without explicit triggers.
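Kiro configures agent hooks inside its IDE, so the following is not Kiro’s implementation. It is a generic, standard-library-only sketch of the file-watcher idea, assuming specs are *.yaml files under one directory: it polls the directory, compares content hashes between snapshots, and hands the callback a summary of what was added, removed, or modified. That summary is the "what changed" context git hooks lack. A production watcher would more likely use operating-system file events (for example via the third-party watchdog package) than polling.

```python
import hashlib
import tempfile
import time
from pathlib import Path
from typing import Callable, Dict, List, Optional

Snapshot = Dict[str, str]
Changes = Dict[str, List[str]]


def snapshot(root: Path, pattern: str = "*.yaml") -> Snapshot:
    """Map every matching spec file under root to a hash of its contents."""
    return {
        str(p): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob(pattern))
    }


def diff_snapshots(before: Snapshot, after: Snapshot) -> Changes:
    """Classify differences between two snapshots."""
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "modified": sorted(p for p in before.keys() & after.keys() if before[p] != after[p]),
    }


def poll_once(root: Path, previous: Snapshot, on_change: Callable[[Changes], None]) -> Snapshot:
    """Take a new snapshot and fire the callback only if something changed."""
    current = snapshot(root)
    changes = diff_snapshots(previous, current)
    if any(changes.values()):
        on_change(changes)
    return current


def watch(root: Path, on_change: Callable[[Changes], None],
          interval: float = 1.0, max_polls: Optional[int] = None) -> None:
    """Poll forever (or max_polls times), triggering on_change for each change."""
    state = snapshot(root)
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(interval)
        state = poll_once(root, state, on_change)
        polls += 1


# Usage: simulate edits between two polls instead of sleeping.
events = []
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "users.yaml").write_text("openapi: 3.0.3\n")
    state = snapshot(root)
    (root / "users.yaml").write_text("openapi: 3.1.0\n")  # modified
    (root / "orders.yaml").write_text("openapi: 3.1.0\n")  # added
    state = poll_once(root, state, events.append)
    state = poll_once(root, state, events.append)  # nothing changed: no event

print(events)
```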

Hook best practices: fail fast, provide clear feedback about what went wrong and how to fix it, keep hooks idempotent so they can be safely re-run, and avoid long-running operations in pre-commit hooks because developers won’t wait.

How do you manage specification lifecycle and maintenance?

Without lifecycle management, you end up with specifications that don’t match reality. We’ve all seen the API documentation that describes endpoints that haven’t existed for two years.

Feature branches contain specification changes alongside code changes. The main branch remains the stable source of truth. Specification reviews happen before merging, with dedicated reviewers who have API design expertise, not just code reviewers who might miss design issues. Semantic versioning for APIs handles deprecations gracefully and communicates change impact clearly.

Drift detection prevents specifications from diverging from reality. Specification-code synchronisation tools compare specifications to implemented APIs and flag differences. By one estimate, 47% of development teams struggle with backward compatibility during updates, which makes drift detection particularly valuable. You can’t fix what you don’t know is broken.
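At its simplest, drift detection is a set comparison between the operations a specification documents and the routes a service actually exposes. The sketch below assumes you can obtain the implemented routes as (METHOD, path) pairs already written in OpenAPI path-template form, for example from your framework’s route table or an API gateway export; that extraction step is hypothetical here and varies by stack.

```python
from typing import Dict, List, Set, Tuple

Operation = Tuple[str, str]  # (HTTP method, path template)
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def documented_operations(spec: dict) -> Set[Operation]:
    """Collect (METHOD, path) pairs declared in an OpenAPI-style dict."""
    return {
        (method.upper(), path)
        for path, item in spec.get("paths", {}).items()
        for method in item
        if method in HTTP_METHODS
    }


def detect_drift(spec: dict, implemented: Set[Operation]) -> Dict[str, List[Operation]]:
    """Report operations that exist on only one side of the spec/code boundary."""
    documented = documented_operations(spec)
    return {
        "missing_in_code": sorted(documented - implemented),
        "undocumented_in_code": sorted(implemented - documented),
    }


spec = {
    "paths": {
        "/users": {"get": {}, "post": {}},
        "/users/{id}": {"get": {}, "parameters": []},
    }
}
# Hypothetical route table extracted from the running service.
implemented = {("GET", "/users"), ("GET", "/users/{id}"), ("DELETE", "/users/{id}")}

drift = detect_drift(spec, implemented)
print(drift)
```

Each reported entry still needs human judgement: a missing operation may mean the spec is ahead of the code, or that someone removed an endpoint without updating the spec.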

When drift is detected, determine whether the specification or implementation is correct. Someone made an undocumented change somewhere. Update the specification to match reality or regenerate code from the specification depending on which represents the intended behaviour.

Synchronisation strategies maintain alignment over time. Automated regeneration triggers run when specifications change—the specification is the source of truth, code follows. Update workflows define who can propose specification changes and what testing validates changes before they’re accepted. Proper specification lifecycle management ensures versioning and maintenance processes prevent drift over time.

Keep all code, configurations, and scripts under version control with easy rollback, so every change is tracked and you have a safety net when things go wrong. Validation gates detect breaking changes automatically before they reach consumers. API versioning strategies, such as URL versioning (/v1/users) or header-based versioning, allow multiple specification versions to coexist during transitions.
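A validation gate for breaking changes can start very small. This sketch compares two parsed OpenAPI-style documents and flags two common breaking patterns: an operation that disappeared, and an operation that gained a required parameter. Dedicated tools check far more (response schemas, enum values, types); the rule set here is an illustrative assumption, and path-level parameters are ignored for brevity.

```python
from typing import List, Set, Tuple

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def required_params(op: dict) -> Set[Tuple[str, str]]:
    """(name, location) pairs for the operation's required parameters."""
    return {(p["name"], p["in"]) for p in op.get("parameters", []) if p.get("required")}


def breaking_changes(old: dict, new: dict) -> List[str]:
    """List changes in `new` that would break clients written against `old`.

    Catches removed operations and parameters that are newly required,
    including optional parameters that became required.
    """
    problems = []
    new_paths = new.get("paths", {})
    for path, old_item in old.get("paths", {}).items():
        new_item = new_paths.get(path, {})
        for method, old_op in old_item.items():
            if method not in HTTP_METHODS:
                continue
            where = f"{method.upper()} {path}"
            new_op = new_item.get(method)
            if new_op is None:
                problems.append(f"{where}: operation removed")
                continue
            for name, location in sorted(required_params(new_op) - required_params(old_op)):
                problems.append(f"{where}: new required {location} parameter '{name}'")
    return problems


v1 = {"paths": {
    "/users": {"get": {"parameters": [{"name": "limit", "in": "query"}]}},
    "/users/{id}": {"delete": {"parameters": [{"name": "id", "in": "path", "required": True}]}},
}}
v2 = {"paths": {
    "/users": {"get": {"parameters": [
        {"name": "limit", "in": "query"},
        {"name": "tenant", "in": "query", "required": True},
    ]}},
}}

changes = breaking_changes(v1, v2)
print(changes)  # a non-empty list should fail the gate
```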

What Git workflows work best with spec-driven development?

Your feature branch starts with specification changes before any code is written. This is the key mindset shift. Specification changes get committed first, triggering validation and generation workflows that produce the code you’ll then review and refine.

Specification review requires different expertise than code review. Reviewers need API design knowledge (RESTful principles, GraphQL best practices), domain understanding of the business problem being solved, and security awareness around authentication and authorisation patterns.

Review checklists structure reviews consistently: check completeness of all endpoints and data models, verify clarity of descriptions and examples, confirm API design principles are followed, validate backward compatibility with existing versions.

Repository structure matters for workflow efficiency. Monorepo patterns store specifications alongside code in /specs directories within each service repository. Benefits: simpler CI/CD because everything is in one place, atomic commits that keep specs and code in sync, and less synchronisation complexity. Trade-offs: repository size grows over time, and specifications become more tightly coupled to implementations.

Separate specification repositories create clearer boundaries. Your organisation maintains an api-specifications repository that multiple service repositories reference. This provides independent versioning of specifications and reusability across multiple implementations. Trade-offs: synchronisation challenges when specifications change and cross-repository dependencies that complicate builds.

CODEOWNERS file integration automates reviewer assignment. Map /specs/auth/** to your security team, /specs/payments/** to your payments team. GitHub or GitLab automatically adds the right reviewers when specifications change.
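To check which team a given CODEOWNERS file will pull into a specification review, a rough Python approximation of the matching rule is enough. GitHub applies the last matching pattern in the file, which is why the broad /specs/** rule comes first below. The team handles are made up, and fnmatch only approximates the gitignore-style globbing GitHub actually uses, so treat this as a review aid rather than a reimplementation.

```python
from fnmatch import fnmatchcase
from typing import List, Tuple

Rule = Tuple[str, List[str]]

CODEOWNERS = """
# Specification ownership (team handles are hypothetical)
/specs/**           @example-org/api-design
/specs/auth/**      @example-org/security
/specs/payments/**  @example-org/payments
"""


def parse_codeowners(text: str) -> List[Rule]:
    """Turn CODEOWNERS lines into (pattern, owners) rules, skipping comments."""
    rules = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        pattern, *owners = line.split()
        rules.append((pattern.lstrip("/"), owners))  # repo-root-relative pattern
    return rules


def owners_for(path: str, rules: List[Rule]) -> List[str]:
    """Like GitHub, let the last matching rule win."""
    owners: List[str] = []
    for pattern, rule_owners in rules:
        if fnmatchcase(path, pattern):
            owners = rule_owners
    return owners


rules = parse_codeowners(CODEOWNERS)
for changed in ["specs/auth/oauth.yaml", "specs/users.yaml", "src/main.py"]:
    print(changed, owners_for(changed, rules))
```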

How do you handle specification conflicts in large projects?

Multi-team environments create conflict types beyond standard merge conflicts in git.

Overlapping requirements: Team A creates /users as a REST endpoint. Team B creates a GraphQL users query for the same data. Both are valid in isolation, but together they are inconsistent and confusing for API consumers. Detect these overlaps by automatically scanning for duplicate endpoint paths and resource names. Resolution needs governance: an architecture review board evaluates conflicts and makes binding decisions about which approach to use.
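Automated scanning for overlaps can be as simple as normalising every team’s top-level resource names into one table and flagging names claimed more than once. The sketch assumes REST specs are parsed OpenAPI-style dicts and that GraphQL query field names have already been extracted from each schema; both inputs and the team names are illustrative. It surfaces candidates for the review board; it decides nothing.

```python
from collections import defaultdict
from typing import Dict, List, Set


def rest_resources(spec: dict) -> Set[str]:
    """First literal path segment of each path, e.g. '/users/{id}' -> 'users'."""
    names = set()
    for path in spec.get("paths", {}):
        segments = [s for s in path.split("/") if s and not s.startswith("{")]
        if segments:
            names.add(segments[0].lower())
    return names


def find_overlaps(rest_specs: Dict[str, dict],
                  graphql_queries: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Resource names claimed by more than one team or API style."""
    claims = defaultdict(set)
    for team, spec in rest_specs.items():
        for name in rest_resources(spec):
            claims[name].add(f"{team} (REST)")
    for team, queries in graphql_queries.items():
        for query in queries:
            claims[query.lower()].add(f"{team} (GraphQL)")
    return {name: sorted(owners) for name, owners in sorted(claims.items()) if len(owners) > 1}


rest_specs = {"team-a": {"paths": {"/users": {}, "/users/{id}": {}, "/sessions": {}}}}
graphql_queries = {"team-b": ["users", "invoices"]}

print(find_overlaps(rest_specs, graphql_queries))
```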

Inconsistent specifications: User entity has different required fields across specifications. Authentication mechanisms vary between services. Error responses differ in format and content. Detection uses cross-specification validation tools that check consistency. Establish organisation-wide design standards, shared data models in a common schema repository, and specification linting rules that enforce consistency.

Dependency conflicts: Specification A depends on types defined in Specification B. Changes to B break A, and nobody notices until builds fail. Maintain a dependency graph showing which specifications reference which. Run impact analysis before approving changes. Automate version tracking for shared specifications to reduce integration conflicts.
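Impact analysis falls out of the dependency graph once you have one: invert the "depends on" edges and walk them breadth-first from the changed specification. The sketch assumes the graph is maintained as a mapping from each specification to the specifications it references; the spec names are placeholders.

```python
from collections import defaultdict, deque
from typing import Dict, List, Set


def impacted_by(changed: str, depends_on: Dict[str, Set[str]]) -> List[str]:
    """All specifications that directly or transitively depend on `changed`."""
    dependents = defaultdict(set)  # reversed edges: dependency -> dependents
    for spec, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(spec)

    seen: Set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents[current]:
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    seen.discard(changed)  # a cycle could lead back to the changed spec itself
    return sorted(seen)


depends_on = {
    "orders": {"common-types"},
    "payments": {"common-types", "orders"},
    "reporting": {"payments"},
    "auth": set(),
}

print(impacted_by("common-types", depends_on))
print(impacted_by("orders", depends_on))
```

Running this before approving a change to common-types tells reviewers which owning teams should also sign off.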

Prevention beats resolution every time. Define clear ownership boundaries for different parts of your API surface. Communicate early when planning changes that might affect other teams. Maintain design consistency through shared standards and tooling. Schedule regular synchronisation meetings for teams working on related specifications.

What metrics should you track for spec-driven development?

Code generation success rate: successful generations divided by total attempts. Target 85%+ at maturity when processes are well-established, 75%+ during expansion to new teams, 60%+ during initial pilot phase.
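The arithmetic is trivial, but encoding the phase targets next to it keeps dashboards and alerts consistent. The thresholds below are the ones given above; how you count an "attempt" (per spec, per generation run, per retry) is a choice you’ll need to fix up front.

```python
# Phase targets for code generation success rate, as proposed in the text.
TARGETS = {"pilot": 0.60, "expansion": 0.75, "maturity": 0.85}


def generation_success_rate(successes: int, attempts: int) -> float:
    """Successful generations divided by total attempts."""
    if attempts <= 0:
        raise ValueError("need at least one generation attempt")
    return successes / attempts


def meets_target(successes: int, attempts: int, phase: str) -> bool:
    """True if the observed rate reaches the target for the given adoption phase."""
    return generation_success_rate(successes, attempts) >= TARGETS[phase]


rate = generation_success_rate(41, 52)
print(f"{rate:.1%}")  # 78.8%
print(meets_target(41, 52, "expansion"), meets_target(41, 52, "maturity"))  # True False
```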

Specification quality metrics: completeness score based on required fields and documentation, clarity rating from peer reviews, standards compliance percentage from automated linting.

Production incident rate compares AI-generated code to manually written code. By one estimate, organisations with robust quality metrics report 37% higher customer satisfaction, which translates into business value and customer retention.

Time to production measures efficiency gains from specification commit to deployed code running in production. This is your headline metric for justifying the investment in spec-driven development.

Developer productivity metrics include deployment frequency, lead time for changes, change failure rate, and time to restore service. These DORA metrics provide standardised benchmarks you can compare against industry averages.

Code quality trends: bug density in generated code, technical debt accumulation over time, code review time for generated versus manual code.

Specification drift rate: drift incidents per month where specs and reality diverged, time to detect drift, time to resolve once detected.

Validation failure rate: track by validation type—syntax errors, semantic issues, breaking changes. This shows where specification quality needs improvement.
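Both of the last two metrics can be computed from data your pipeline and issue tracker already hold. The sketch below assumes each validation run is recorded as None (passed) or a failure-type label, and each drift incident as three timestamps: when the divergence was introduced, detected, and resolved. The sample records are invented purely to exercise the code.

```python
from collections import Counter
from datetime import datetime
from statistics import mean
from typing import Dict, List, Optional, Tuple


def failure_breakdown(runs: List[Optional[str]]) -> Dict[str, float]:
    """Share of all validation runs that failed, per failure type."""
    failures = Counter(kind for kind in runs if kind is not None)
    return {kind: count / len(runs) for kind, count in failures.most_common()}


def drift_response_hours(
    incidents: List[Tuple[datetime, datetime, datetime]]
) -> Tuple[float, float]:
    """Mean hours to detect drift, and mean hours to resolve once detected."""
    to_detect = [(found - introduced).total_seconds() / 3600 for introduced, found, _ in incidents]
    to_resolve = [(fixed - found).total_seconds() / 3600 for _, found, fixed in incidents]
    return mean(to_detect), mean(to_resolve)


# Invented sample data for illustration only.
runs = [None] * 16 + ["syntax"] * 2 + ["semantic", "breaking-change"]
incidents = [
    (datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 15), datetime(2024, 5, 2, 9)),
    (datetime(2024, 5, 10, 9), datetime(2024, 5, 11, 9), datetime(2024, 5, 11, 13)),
]

print(failure_breakdown(runs))
print(drift_response_hours(incidents))
```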

Create transparent, shared KPI dashboards that make performance visible to everyone. Measure your current state before adoption to establish a baseline. Begin by selecting one to five key metrics that directly support your current priorities; don’t try to track everything at once.

What are the best practices for workflow integration?

Teams that adopt incrementally succeed. Teams that try overnight transformation fail. That pattern holds across almost every major technology shift.

Start small. Choose a single team, single project, limited scope. Run a pilot that proves value with real work. Gather feedback from developers actually using the process. Refine based on what you learn. Then expand to additional teams.

Avoid big bang transformation where everything changes at once. This creates too much disruption and you lose the ability to identify what’s working and what’s not.

Run old and new workflows side-by-side initially. Some features developed traditionally, others spec-driven. This enables gradual training of your team and provides a fallback if the new approach isn’t working for a particular use case. Managing a hybrid environment increases system complexity temporarily, which is the cost of safe transformation. Set clear criteria for when parallel workflows end—specific dates or coverage thresholds.

Rollback strategies provide safety nets when things go wrong. Feature flags enable or disable generation without redeployment. Manual overrides bypass automation when needed for urgent fixes. Emergency procedures handle production incidents without requiring the new workflow.

Schedule regular retrospectives to evaluate what’s working and what needs adjustment. Use metrics to drive optimisation decisions. Implement feedback loops so developers can suggest improvements and see them implemented.

Documentation accelerates adoption: workflow guides showing step-by-step processes, runbooks for common issues, example specifications demonstrating best practices, training materials for new team members.

Team training makes adoption smoother: hands-on workshops with real specifications, pair programming between experienced and new users, office hours for questions and troubleshooting, champion networks of early adopters who can help others.

Incremental adoption requires strong discipline: teams must stay focused and systematic, or the migration can stall indefinitely. Assign clear ownership for the transformation initiative. Track milestones and communicate progress. Celebrate wins to maintain momentum.

What does an end-to-end spec-driven workflow look like?

Eight stages from authoring to production, with automation at every stage except code review (which needs human judgment).

Stage 1: Specification authoring. Developers write specs using IDE extensions that provide validation and auto-completion. Tools include VS Code with OpenAPI extensions or Stoplight Studio for visual specification design.

Stage 2: Specification validation via pre-commit hooks. Spectral validates OpenAPI specifications against your organisation’s standards. Custom validators check project-specific requirements. Failed validation blocks commits with clear error messages.

Stage 3: Code generation when specifications reach the main branch. GitHub Copilot, AWS Kiro, or custom scripts produce implementation code following your architectural patterns. Generated code is validated through automated pipelines before developers see it.

Stage 4: Automated testing. Integration tests verify interactions between services. Unit tests validate business logic. Contract tests ensure services interact as expected based on specifications.

Stage 5: Human code review. Reviewers evaluate specification quality and check generated code implements specifications correctly. They look for edge cases the AI might have missed and ensure the code matches your team’s patterns.

Stage 6: Quality gates. SonarQube performs static analysis for code quality issues. OWASP ZAP runs security scanning for common vulnerabilities. Performance testing validates SLAs are met. Failed gates block deployment automatically.

Stage 7: Automated deployment. Deploy to staging first for final validation. Approval gates before production give stakeholders visibility. Blue-green deployments or canary releases provide safe rollout with instant rollback if issues appear.

Stage 8: Ongoing monitoring. Drift detection runs continuously comparing specifications to deployed APIs. When drift is detected, feedback loops trigger specification updates or regeneration depending on which side should change.

State transitions make progress measurable so everyone knows where work stands. Developers see exactly where specifications are in the workflow. Failures are contained and explainable with clear error messages rather than mysterious pipeline failures.
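One way to make those state transitions explicit is a small state machine that rejects illegal moves and records history, which turns "where is my spec?" into a simple lookup. The stage names follow the eight stages above; the allowed transitions (forward one stage, back to authoring on failure, and from monitoring either back to authoring or straight to regeneration) are an assumption about how you’d wire them.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Set, Tuple


class Stage(Enum):
    AUTHORING = 1
    VALIDATION = 2
    GENERATION = 3
    TESTING = 4
    REVIEW = 5
    QUALITY_GATES = 6
    DEPLOYMENT = 7
    MONITORING = 8


# Assumed rules: move forward one stage, or fall back to AUTHORING on failure.
# From MONITORING, drift sends work back to AUTHORING (fix the spec) or
# GENERATION (regenerate from the spec).
TRANSITIONS: Dict[Stage, Set[Stage]] = {
    stage: {Stage(stage.value + 1), Stage.AUTHORING}
    for stage in Stage
    if stage not in (Stage.AUTHORING, Stage.MONITORING)
}
TRANSITIONS[Stage.AUTHORING] = {Stage.VALIDATION}
TRANSITIONS[Stage.MONITORING] = {Stage.AUTHORING, Stage.GENERATION}


@dataclass
class SpecWorkItem:
    name: str
    stage: Stage = Stage.AUTHORING
    history: List[Tuple[Stage, Stage, str]] = field(default_factory=list)

    def advance(self, to: Stage, note: str = "") -> None:
        """Move to `to` if the transition is allowed; otherwise raise."""
        if to not in TRANSITIONS[self.stage]:
            raise ValueError(f"{self.name}: cannot move from {self.stage.name} to {to.name}")
        self.history.append((self.stage, to, note))
        self.stage = to


item = SpecWorkItem("users-api")
item.advance(Stage.VALIDATION)
item.advance(Stage.AUTHORING, "missing 404 response schema")
item.advance(Stage.VALIDATION)
item.advance(Stage.GENERATION)

rejected = None
try:
    item.advance(Stage.DEPLOYMENT)  # skipping testing, review, and gates
except ValueError as err:
    rejected = str(err)

print(item.stage.name, len(item.history), rejected)
```

The history list doubles as the data source for the metrics above: every fallback to AUTHORING carries a note explaining why.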

Total time from specification commit to production: 30-60 minutes of automated pipeline time plus whatever code review time your team needs.

For a complete overview of spec-driven development including tool selection, specification writing, and team adoption strategies, see our complete guide to spec-driven development.

FAQ Section

How long does it take to integrate spec-driven development into existing CI/CD pipelines?

Integration timeline depends on pipeline complexity and team size. Simple pipelines with basic validation take 1-2 weeks for initial setup. Complex multi-stage pipelines with custom tooling require 4-8 weeks for comprehensive integration. Plan for gradual rollout over 2-3 months to refine processes based on feedback and adjust to what works for your team.

Can I use spec-driven development with legacy CI/CD tools like Jenkins?

Yes, spec-driven development works with legacy tools through plugin-based integration or custom scripts. Jenkins supports specification validation via Pipeline-as-Code, shell script execution for validation tools, and the HTTP Request plugin for triggering external generation tools. The integration requires more manual configuration than modern platforms but is fully viable, and plenty of organisations are doing this successfully.

What’s the minimum viable integration for starting with spec-driven development?

Start with specification validation in pre-commit hooks and pull request checks. This catches issues early without requiring full pipeline integration. Use linting tools like Spectral for OpenAPI validation. Once comfortable, add code generation triggers on merge to main branch. Expand from there based on team needs rather than trying to implement everything at once.

How do I convince my team to adopt additional workflow steps for specifications?

Demonstrate value through metrics showing reduced bugs from clear specifications, faster onboarding with self-documenting APIs, and decreased time in code review when specifications are pre-approved. Start with a pilot project showing concrete productivity gains rather than theoretical benefits. Make workflow changes incremental, not disruptive—if it feels like extra work rather than making work easier, you haven’t integrated it properly yet.

What happens when the CI/CD pipeline fails specification validation?

Failed validation blocks the pipeline, preventing non-compliant specifications from progressing to code generation. The developer receives feedback on the validation errors (syntax issues, missing required fields, breaking changes), fixes the specification locally, and re-commits; validation then runs again. Quality gates prevent bad specifications from reaching production. This is exactly what you want: catching issues early, when they’re cheap to fix.

Should I store specifications in the same repository as code or separate repositories?

A monorepo approach (specifications with code) simplifies CI/CD integration, enables atomic commits that keep specs and code in sync, and maintains a single source of truth. Separate repositories provide independent versioning, support multiple implementations per specification, and offer clearer ownership boundaries. Choose based on team structure: a single team per service favours a monorepo, while multiple teams sharing specifications favour separate repos.

How do I handle specification changes that break backward compatibility?

Use semantic versioning for specifications with major version increments for breaking changes—this signals to consumers that they need to update their integrations. Implement validation gates that detect breaking changes and require explicit approval from architecture review. Maintain multiple specification versions simultaneously during deprecation periods so existing consumers aren’t forced to migrate immediately. Notify consumers well before making breaking changes, ideally 3-6 months ahead.
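The version-bump rule is easy to automate once your validation gate has classified a change. A sketch, assuming plain MAJOR.MINOR.PATCH version strings without pre-release tags, and treating any new operation or optional field as a backward-compatible (minor) change:

```python
from typing import List, Tuple


def next_spec_version(current: str, breaking: List[str], additions: List[str]) -> Tuple[str, bool]:
    """Return (next version, whether architecture-review approval is required).

    Assumes plain MAJOR.MINOR.PATCH strings (no pre-release or build metadata).
    """
    major, minor, patch = (int(part) for part in current.split("."))
    if breaking:
        return f"{major + 1}.0.0", True  # breaking: major bump plus explicit approval
    if additions:
        return f"{major}.{minor + 1}.0", False  # backward-compatible additions
    return f"{major}.{minor}.{patch + 1}", False  # fixes and clarifications only


print(next_spec_version("1.4.2", ["DELETE /users/{id}: operation removed"], []))
print(next_spec_version("1.4.2", [], ["GET /orders"]))
print(next_spec_version("1.4.2", [], []))
```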

What’s the performance impact of adding specification validation to CI/CD pipelines?

Initial validation adds 10-30 seconds to pipeline execution for typical OpenAPI specifications. Caching reduces subsequent runs to 5-10 seconds for unchanged specifications. Parallelise validation with other pipeline stages where possible to avoid extending total pipeline time. Performance impact is minimal compared to value of catching specification issues early—a 10-second validation that catches an issue saves hours of debugging later.

Can I use multiple specification formats (OpenAPI, AsyncAPI, GraphQL) in the same pipeline?

Yes, modern CI/CD pipelines support multiple specification formats through different validation tools. Configure separate validation jobs for each format using appropriate linters: Spectral for OpenAPI, AsyncAPI Validator for AsyncAPI, GraphQL Inspector for GraphQL schemas. Maintain consistent quality standards across formats through unified linting rules and quality gates. Many teams end up with multiple formats, depending on what they’re building.

How do I migrate an existing project to spec-driven workflows without disrupting current development?

Use parallel workflows: maintain existing manual development while adding a spec-driven option for new features. Start with non-critical components where mistakes are cheap. Gradually expand spec-driven coverage as the team gains confidence and processes mature. Avoid forcing a complete migration all at once; that’s a recipe for resistance and failure. Plan a 3-6 month transition period with clear milestones, and celebrate progress along the way.

What skills does my team need to successfully integrate spec-driven development?

Required skills include API design principles (REST, GraphQL, async messaging), specification format expertise (OpenAPI, AsyncAPI, GraphQL), CI/CD pipeline configuration for your specific platform, and version control workflows beyond basic git operations. Helpful skills include automation scripting for custom hooks, prompt engineering for AI code generation tools, and observability and monitoring for production systems. Most teams can learn these skills incrementally through documentation, training, and hands-on practice over 1-2 months—you don’t need to hire all new people.

How do agent hooks differ from traditional git hooks in spec-driven workflows?

Agent hooks are file system watchers that continuously monitor specification changes and trigger automation, while git hooks execute only at specific git events like commit or push. Agent hooks provide richer context about which files changed and what changed in them, integrate with monitoring systems for observability, and support more complex automation workflows based on content changes. Git hooks are simpler, require no additional infrastructure, but have limited scope—they only know a git event happened, not what the change means.