Container orchestration has become essential for modern applications, yet many organizations struggle with deployment complexity and downtime during releases. This comprehensive guide is part of our complete DevOps automation and CI/CD pipeline guide, exploring how to choose the right container orchestration platform, implement zero-downtime deployment strategies, and establish GitOps workflows that scale with your organization. You’ll learn practical implementation steps for blue-green and canary deployments, how to choose between Kubernetes and Docker Swarm based on your specific needs, and how to set up GitOps with Argo CD or Flux CD. Whether you’re migrating from traditional deployments or scaling existing containerized applications, this guide provides the decision frameworks and step-by-step implementations needed to build production-ready container orchestration that reduces risk while improving deployment velocity.

Why Does Container Orchestration Matter for Modern Applications?

Container orchestration automates the deployment, scaling, and management of containerized applications across multiple hosts, eliminating manual configuration errors and enabling consistent environments from development to production. Modern applications require orchestration to handle service discovery, load balancing, health monitoring, and automatic scaling that manual container management cannot efficiently provide at scale.

The shift from monolithic architectures to microservices has created a fundamental need for orchestration capabilities. A single application might now consist of dozens of containers, each requiring coordination with others through service discovery mechanisms. When one container fails and restarts, the system must automatically update load balancer configurations and health check routing without manual intervention.

Cloud-native infrastructure demands visibility because “what you can’t see in the runtime layer can’t be fixed”. A failing container may restart on its own, but if the root cause is architectural, it may reappear moments later, somewhere else. This reality makes observability not an add-on but a fundamental requirement for container orchestration success.

The business benefits extend beyond technical convenience. Organizations using container orchestration report reduced downtime, faster deployments, and significant cost optimization through better resource utilization. The operational consistency achieved through orchestration enables teams to focus on feature development rather than infrastructure management.

A complete Kubernetes setup needs DNS, load balancing, Ingress, and RBAC, along with additional components, which can make deployment daunting for IT teams. That complexity serves a purpose, though: it turns zero-downtime deployments, auto-scaling clusters, and cross-region failover from aspirations into design choices. When combined with proper infrastructure as code implementation, container orchestration provides the foundation for truly automated, scalable application delivery.

How to Choose Between Kubernetes and Docker Swarm?

Choose Kubernetes for enterprise-scale applications requiring advanced features, multi-cloud support, and extensive ecosystem integrations, while Docker Swarm works best for smaller teams needing simple container orchestration with minimal operational overhead. The decision depends on your team size, scalability requirements, operational complexity tolerance, and existing infrastructure investments.

Docker Swarm offers compelling advantages for smaller deployments. Initializing a cluster takes a single command, docker swarm init, and Swarm is lightweight, easy to set up, and integrated with the Docker CLI. The built-in load balancing and gentler learning curve make it ideal for small to medium-scale projects where operational simplicity outweighs advanced feature requirements.

However, Docker Swarm’s limitations become apparent at scale. Limited scalability, fewer advanced networking features, smaller community, and basic security controls restrict its enterprise applicability. Teams using Docker Swarm often require third-party tools for monitoring and face less flexible deployment options compared to Kubernetes alternatives.

Kubernetes provides enterprise-grade capabilities that justify its complexity. Advanced load balancing and networking, robust community support, extensive third-party integrations, and strong security with RBAC create a comprehensive platform for complex applications. Multi-cloud and hybrid-cloud support, advanced resource management, and built-in health probes and resource metrics position Kubernetes as the clear choice for organizations expecting growth.

The decision matrix becomes clearer when considering team capabilities and application requirements. Teams with fewer than 10 developers managing simple applications benefit from Docker Swarm’s simplicity. Organizations planning multi-cloud deployments, complex networking requirements, or expecting significant scale should invest in Kubernetes despite its steeper learning curve and configuration complexity.
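The rule of thumb above can be written down as a small function. This is only a sketch of the criteria named in this section: the `TeamProfile` fields and the `recommend_orchestrator` name are hypothetical, and the fallback to Kubernetes for larger teams is an assumption based on its status as the de facto standard.

```python
from dataclasses import dataclass


@dataclass
class TeamProfile:
    developers: int
    multi_cloud: bool = False
    complex_networking: bool = False
    expects_significant_scale: bool = False


def recommend_orchestrator(profile: TeamProfile) -> str:
    """Encode the rule of thumb from this section; not a substitute for a real evaluation."""
    needs_kubernetes = (
        profile.multi_cloud
        or profile.complex_networking
        or profile.expects_significant_scale
    )
    if needs_kubernetes:
        return "kubernetes"
    if profile.developers < 10:
        return "docker-swarm"
    # Assumption: larger teams without special requirements default to Kubernetes.
    return "kubernetes"


print(recommend_orchestrator(TeamProfile(developers=6)))
print(recommend_orchestrator(TeamProfile(developers=6, multi_cloud=True)))
```

A real evaluation would also weigh existing infrastructure investments and operational tolerance, which do not reduce cleanly to booleans.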

Kubernetes has essentially “won over Docker Swarm” due to its comprehensive capabilities and strong ecosystem support, making it the de facto standard for container orchestration in enterprise environments.

How to Implement Blue-Green Deployments?

Blue-green deployment creates two identical production environments where traffic switches instantly between the current version (blue) and new version (green) after validation, enabling zero-downtime releases with immediate rollback capabilities. Implementation requires duplicate infrastructure resources but provides the safest deployment strategy for mission-critical applications.

The blue-green architecture starts with establishing two identical production environments. The blue environment serves live traffic while the green environment remains idle, ready for deployment. New versions deploy to the green environment, where validation and testing occur before seamlessly switching traffic from blue to green through load balancer configuration changes.

Traffic switching mechanisms vary based on infrastructure choices. Load balancers provide the most common approach, updating backend pool configurations to redirect requests from blue to green environments. Service mesh implementations like Istio offer more granular traffic control with additional observability features. DNS-based switching works for applications tolerating propagation delays but provides less immediate control.

Validation processes become essential before traffic switching. Automated health checks verify application functionality, database connectivity, and external service integration. Smoke tests validate critical user paths while performance benchmarks ensure the new version maintains acceptable response times. Only after passing all validation gates should traffic switching commence.

The rollback procedure provides blue-green deployment’s greatest advantage. When issues arise, switching traffic back to the blue environment provides instant rollback capabilities without deployment delays or service interruptions. This safety net makes blue-green deployment particularly valuable for customer-facing services requiring high availability.
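The switch-and-rollback flow can be illustrated with a toy model. The sketch below is not a real load balancer integration: `BlueGreenRouter` is a hypothetical stand-in that only tracks which environment is live, and the validation gates are plain callables supplied by the caller.

```python
from typing import Callable, List, Optional


class BlueGreenRouter:
    """Toy stand-in for a load balancer that sends all traffic to one environment."""

    def __init__(self, active: str = "blue") -> None:
        self.active = active
        self.previous: Optional[str] = None

    @property
    def idle(self) -> str:
        return "green" if self.active == "blue" else "blue"

    def switch(self, checks: List[Callable[[str], bool]]) -> bool:
        """Cut over to the idle environment only if every validation gate passes."""
        target = self.idle
        if not all(check(target) for check in checks):
            return False  # live traffic is left untouched
        self.previous, self.active = self.active, target
        return True

    def rollback(self) -> None:
        """Point traffic back at the environment that was live before the last switch."""
        if self.previous is not None:
            self.active, self.previous = self.previous, self.active


router = BlueGreenRouter()
router.switch([lambda env: True])   # health checks and smoke tests would go here
print(router.active)
router.rollback()
print(router.active)
```

Because the old environment keeps running after the cutover, the rollback is just another pointer change, which is why it can be near-instant.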

Infrastructure cost considerations require careful evaluation. Running duplicate environments doubles resource requirements, making blue-green deployment more expensive than alternatives like rolling updates. However, for mission-critical applications and customer-facing services requiring high availability, the cost justification becomes clear through reduced downtime and business impact protection.

Tools like Devtron, Argo Rollouts, and Flagger provide implementation frameworks that automate traffic switching and rollback procedures, reducing operational complexity while maintaining deployment safety.

How to Set Up Canary Deployments with Progressive Rollout?

Canary deployment gradually routes increasing percentages of traffic to new application versions, starting with 1-5% of users and progressively expanding based on success metrics and monitoring data. This approach minimizes risk by limiting exposure to potential issues while providing real-world validation under production load.

The canary process begins with deploying the new version alongside the existing production version, similar to blue-green deployment setup. However, instead of complete traffic switching, canary deployment implements traffic splitting mechanisms that route a small percentage of requests to the new version. This gradual approach allows monitoring of real-world performance before broader exposure.

Traffic splitting strategies require careful consideration of user experience and data consistency. Random percentage-based splitting distributes load evenly but may impact user session continuity. User attribute-based routing—targeting specific user segments or geographic regions—provides more controlled exposure but requires application awareness of routing decisions.
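One common way to keep percentage-based splitting from breaking session continuity is to hash a stable user identifier into a bucket, so the same user always lands on the same version. The sketch below assumes such an identifier is available at the routing layer; `bucket_for` and `routes_to_canary` are illustrative names, not part of any particular proxy or service mesh.

```python
import hashlib


def bucket_for(user_id: str, buckets: int = 100) -> int:
    """Map a user to a stable bucket in [0, buckets) using a hash of the ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Users in the lowest `canary_percent` buckets see the canary version."""
    return bucket_for(user_id) < canary_percent


for user in ("alice", "bob", "carol"):
    print(user, routes_to_canary(user, canary_percent=5))
```

Because the bucket depends only on the user ID, raising the canary percentage adds new users to the canary without moving anyone back to the old version.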

Success metrics and monitoring form the foundation of effective canary deployment. Application performance indicators like response time, error rates, and resource utilization provide technical validation. Business metrics including conversion rates, user engagement, and customer satisfaction scores offer broader impact assessment. Monitoring provides the data needed to make informed decisions about progressive rollout expansion.

Automated rollback triggers protect against canary deployment issues. Threshold-based alerts monitoring error rates, performance degradation, or business metric declines can automatically halt traffic increases or revert to the previous version. These safety mechanisms prevent minor issues from becoming major incidents through automated response.
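A threshold-based trigger of this kind can be sketched as a function that compares canary metrics against the stable baseline. The thresholds here (1% error rate, 20% latency regression) are placeholder assumptions chosen for illustration, and `CanaryMetrics` and `decide` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class CanaryMetrics:
    error_rate: float        # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float


def decide(
    canary: CanaryMetrics,
    baseline: CanaryMetrics,
    max_error_rate: float = 0.01,      # assumed threshold
    max_latency_ratio: float = 1.2,    # assumed threshold
) -> str:
    """Return 'rollback', 'hold', or 'promote' for the next rollout step."""
    if canary.error_rate > max_error_rate:
        return "rollback"   # revert to the previous version
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "hold"       # halt traffic increases and keep observing
    return "promote"


baseline = CanaryMetrics(error_rate=0.002, p95_latency_ms=100.0)
print(decide(CanaryMetrics(error_rate=0.05, p95_latency_ms=110.0), baseline))
```

Tools such as Argo Rollouts and Flagger express similar checks declaratively against a metrics backend; this sketch only shows the decision logic.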

Progressive rollout schedules balance risk management with deployment speed. Starting with 1% traffic exposure and roughly doubling at each stage (1%, 2%, 5%, 10%, 25%, 50%, 100%) provides measured expansion with sufficient monitoring time between stages. Faster schedules work for low-risk changes while conservative approaches suit critical system updates.
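To make the trade-off between speed and caution concrete, the sketch below turns a list of traffic percentages and a fixed soak time into a timeline. The 15-minute soak time is an assumption for illustration, and `rollout_plan` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def rollout_plan(stages: Sequence[int], soak_minutes: int) -> List[Tuple[int, int]]:
    """Return (traffic_percent, start_minute) pairs for each rollout stage."""
    if not stages or stages[-1] != 100:
        raise ValueError("the final stage must route 100% of traffic")
    if stages[0] <= 0:
        raise ValueError("stages must be positive percentages")
    if any(later <= earlier for earlier, later in zip(stages, stages[1:])):
        raise ValueError("stages must strictly increase")
    return [(percent, index * soak_minutes) for index, percent in enumerate(stages)]


for percent, start in rollout_plan([1, 2, 5, 10, 25, 50, 100], soak_minutes=15):
    print(f"t+{start:>3} min: {percent}% of traffic on the new version")
```

With seven stages and a 15-minute soak, full exposure is reached 90 minutes after the first 1% step; halving the soak time or dropping stages shortens that at the cost of less observation per stage.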

A/B testing integration with canary deployments extends beyond operational validation to include feature effectiveness evaluation. This combination enables organizations to assess both technical stability and business impact before full deployment commitment.

Implementation tools like Argo Rollouts, Flagger, and service mesh solutions provide automation frameworks for canary deployment management, handling traffic splitting, monitoring integration, and rollback automation.

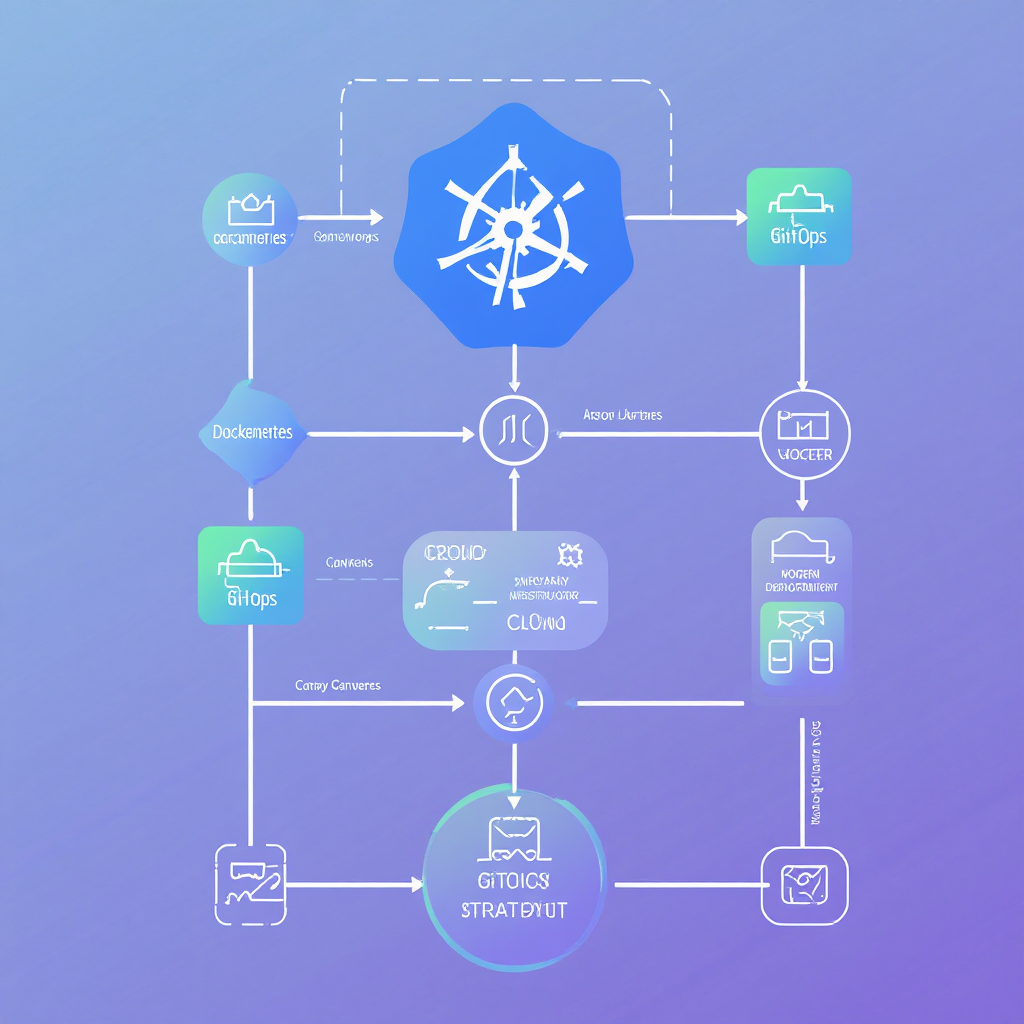

How to Implement GitOps with Argo CD?

GitOps with Argo CD uses Git repositories as the single source of truth for application configurations, automatically syncing deployed applications with their Git-defined desired state through continuous monitoring and reconciliation. Argo CD provides a web UI for visualizing deployments, managing multiple clusters, and implementing advanced deployment strategies.
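The reconciliation idea can be shown with a deliberately simplified diff between the state declared in Git and the state observed in the cluster. This is a conceptual sketch, not how Argo CD computes differences internally; `diff_state` and the resource dictionaries are hypothetical.

```python
from typing import Dict, List, Tuple


def diff_state(desired: Dict[str, dict], live: Dict[str, dict]) -> List[Tuple[str, str]]:
    """Compare Git-defined resources with live ones and list the actions needed."""
    actions: List[Tuple[str, str]] = []
    for name, spec in sorted(desired.items()):
        if name not in live:
            actions.append(("create", name))
        elif live[name] != spec:
            actions.append(("update", name))
    for name in sorted(live):
        if name not in desired:
            actions.append(("prune", name))  # exists in the cluster but not in Git
    return actions


desired = {"web": {"image": "web:1.1"}, "api": {"image": "api:2.0"}}
live = {"web": {"image": "web:1.0"}, "worker": {"image": "worker:0.9"}}
print(diff_state(desired, live))
```

An empty action list corresponds to an in-sync application; anything else is drift that a sync operation would correct.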

The GitOps workflow starts with repository structure organization. Application manifests, Helm charts, or Kustomize configurations reside in Git repositories with clear branching strategies separating development, staging, and production environments. This approach builds on the same principles covered in our infrastructure as code strategy guide, extending version control benefits to application deployment configurations. Argo CD supports Kubernetes manifests, Kustomize, and Helm, providing flexibility in configuration management approaches.

Argo CD installation begins with cluster deployment using kubectl or Helm charts. The installation creates necessary namespaces, RBAC configurations, and custom resource definitions required for GitOps operations. Initial configuration includes Git repository connections, authentication setup, and application project definitions that organize deployment permissions and policies.

Application configuration within Argo CD defines the relationship between Git repositories and Kubernetes clusters. Applications specify source repository locations, target clusters, namespaces, and sync policies determining automatic or manual deployment triggers. Multi-cluster management capabilities enable centralized control across development, staging, and production environments from a single Argo CD instance.
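As an illustration of what such an application definition contains, the sketch below builds an Argo CD Application resource as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The repository URL, path, and names are hypothetical placeholders; the field layout follows the `argoproj.io/v1alpha1` Application resource, but check it against the Argo CD version you run.

```python
import json


def argo_application(
    name: str,
    repo_url: str,
    path: str,
    namespace: str,
    automated: bool = True,
    target_revision: str = "HEAD",
    cluster: str = "https://kubernetes.default.svc",  # the cluster Argo CD runs in
    project: str = "default",
) -> dict:
    """Build an Application manifest linking a Git path to a cluster namespace."""
    app = {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": project,
            "source": {"repoURL": repo_url, "targetRevision": target_revision, "path": path},
            "destination": {"server": cluster, "namespace": namespace},
        },
    }
    if automated:
        # Automatic sync: remove resources deleted from Git and revert manual drift.
        app["spec"]["syncPolicy"] = {"automated": {"prune": True, "selfHeal": True}}
    return app


manifest = argo_application(
    name="shop-web",
    repo_url="https://git.example.com/platform/deployments.git",  # hypothetical repo
    path="apps/shop-web/production",                               # hypothetical path
    namespace="shop",
)
print(json.dumps(manifest, indent=2))
```

Leaving out the `syncPolicy` block (here, `automated=False`) keeps the application on manual sync, which some teams prefer for production.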

The web-based user interface makes managing and monitoring deployments easy through visual representation of application states, sync status, and resource health. The interface provides drill-down capabilities for troubleshooting, manual sync triggering, and rollback operations when needed.

Argo CD supports SSO natively and has its own RBAC model, so access can be tied to the organization’s existing authentication systems. Projects provide logical isolation, enabling team-based access control while maintaining centralized oversight and governance.

CI/CD pipeline integration connects traditional build processes with GitOps deployment workflows. Build pipelines create container images and update Git repositories with new image tags or configuration changes, triggering Argo CD sync operations that deploy updates to target environments.
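The "update Git with a new image tag" step is often a small script in the build pipeline. The sketch below shows the core transformation on an already-parsed Deployment manifest; reading the file, committing, and pushing are left out, and `with_tag` and `set_image_tag` are hypothetical helper names.

```python
import copy


def with_tag(image: str, tag: str) -> str:
    """Replace the tag (or digest) of an image reference, keeping any registry port."""
    head, sep, last = image.rpartition("/")
    name = last.split(":", 1)[0].split("@", 1)[0]
    return f"{head}{sep}{name}:{tag}"


def set_image_tag(deployment: dict, container: str, tag: str) -> dict:
    """Return a copy of a Deployment manifest with one container's image tag changed."""
    updated = copy.deepcopy(deployment)
    for spec in updated["spec"]["template"]["spec"]["containers"]:
        if spec["name"] == container:
            spec["image"] = with_tag(spec["image"], tag)
            return updated
    raise KeyError(f"container {container!r} not found")


deployment = {
    "spec": {"template": {"spec": {"containers": [
        {"name": "web", "image": "registry.example.com:5000/shop/web:1.4.2"},
    ]}}}
}
print(set_image_tag(deployment, "web", "1.5.0")["spec"]["template"]["spec"]["containers"][0]["image"])
```

Once the changed manifest is committed, the Git repository differs from the cluster and the next sync deploys the new image.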

Advanced deployment strategies including Canary, Blue-Green, and Progressive delivery integrate with Argo CD through Argo Rollouts, extending basic GitOps capabilities with sophisticated deployment patterns and automated rollback mechanisms.

What Are Container Cost Optimization Strategies?

Container cost optimization focuses on rightsizing resources through monitoring-based recommendations, implementing horizontal pod autoscaling, using spot instances for non-critical workloads, and optimizing image sizes and resource requests. Cost analysis should concentrate on the efficiency measures that affect total cost of ownership; effective strategies can reduce infrastructure costs by 30-60% while maintaining performance and availability.

Resource rightsizing begins with understanding actual resource utilization versus requested resources. Auto-scaling based on workload needs can help save resources, but requires accurate baseline measurements and performance monitoring to establish appropriate scaling thresholds. Many organizations discover significant over-provisioning when implementing usage-based monitoring.
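One simple way to turn utilization data into a request value is to take a high percentile of observed usage and add headroom. The sketch below assumes CPU samples in millicores; the 95th percentile and 15% headroom are illustrative defaults, not recommendations from any specific tool.

```python
import math
from typing import Sequence


def recommend_request(
    samples_millicores: Sequence[int],
    percentile: int = 95,        # assumed default
    headroom_percent: int = 15,  # assumed default
) -> int:
    """Suggest a CPU request: nearest-rank percentile of usage plus headroom."""
    if not samples_millicores:
        raise ValueError("at least one usage sample is required")
    ordered = sorted(samples_millicores)
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return math.ceil(ordered[rank - 1] * (100 + headroom_percent) / 100)


usage = list(range(10, 210, 10))   # 20 samples: 10m, 20m, ..., 200m
requested = 1000                   # what the workload currently asks for
suggested = recommend_request(usage)
print(f"requested {requested}m, suggested {suggested}m")
```

In this made-up sample the workload requests a full core while its usage suggests roughly a fifth of that, which is the kind of over-provisioning usage-based monitoring tends to surface.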

Server virtualization enables improved server utilization by consolidating several underutilized servers onto fewer physical machines, maximizing resource efficiency while reducing data center space requirements. Container orchestration extends this concept through dynamic resource allocation, allowing multiple applications to share infrastructure resources more effectively than traditional deployment models.

Horizontal pod autoscaling provides dynamic resource adjustment based on metrics like CPU utilization, memory usage, or custom business metrics. Configuration requires defining minimum and maximum replica counts, target utilization thresholds, and scaling policies that balance responsiveness with stability. Vertical pod autoscaling complements horizontal scaling by adjusting individual container resource requests based on observed usage patterns.
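The core of the horizontal pod autoscaler calculation documented by Kubernetes is a ratio: desired replicas equal the current replica count multiplied by the current metric value over the target value, rounded up. The sketch below reproduces that arithmetic with min/max clamping and a tolerance band; it ignores stabilization windows, scaling policies, and pod readiness handling.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Apply ceil(current * currentMetric / targetMetric), clamped to the allowed range."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas          # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target
print(desired_replicas(4, current_metric=90, target_metric=60, min_replicas=2, max_replicas=10))
```

The tolerance band is what keeps the replica count from flapping when utilization hovers near the target.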

Cloud provider cost optimization strategies include spot instance utilization for fault-tolerant workloads. Spot instances provide significant cost savings—often 60-80% less than on-demand pricing—for workloads that can tolerate interruptions. Batch processing, development environments, and stateless applications benefit from spot instance adoption.
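The effect of moving part of a cluster to spot capacity is simple arithmetic. The figures below (node count, hourly price, a 70% spot discount, 730 hours per month) are made-up inputs for illustration; actual spot prices vary by provider, region, and time.

```python
def monthly_cost(
    nodes: int,
    on_demand_hourly: float,
    spot_share: float,      # fraction of nodes on spot, 0.0 to 1.0
    spot_discount: float,   # fraction off the on-demand price, 0.0 to 1.0
    hours: int = 730,
) -> float:
    """Blended monthly cost for a cluster that mixes on-demand and spot nodes."""
    spot_nodes = nodes * spot_share
    on_demand_nodes = nodes - spot_nodes
    spot_hourly = on_demand_hourly * (1 - spot_discount)
    return hours * (on_demand_nodes * on_demand_hourly + spot_nodes * spot_hourly)


baseline = monthly_cost(10, 0.20, spot_share=0.0, spot_discount=0.70)
blended = monthly_cost(10, 0.20, spot_share=0.5, spot_discount=0.70)
print(f"all on-demand: ${baseline:.2f}, half spot: ${blended:.2f}")
print(f"saving: {1 - blended / baseline:.0%}")
```

The overall saving is the spot share multiplied by the discount, so it depends as much on how much of the workload can tolerate interruption as on the discount itself.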

Container image optimization reduces storage costs and deployment times through multi-stage builds, minimal base images, and layer caching strategies. Alpine Linux base images provide smaller footprints than full distributions while maintaining necessary functionality. Image scanning and vulnerability management provide additional security benefits while reducing compliance costs.

Monitoring and alerting for cost anomalies prevent budget overruns through automated notifications when spending exceeds thresholds or usage patterns change unexpectedly. Tools like Kubernetes Resource Recommender analyze historical usage to suggest optimal resource requests, reducing waste while maintaining performance guarantees.

The goal is to optimize the balance between performance requirements and cost savings to ensure long-term financial sustainability through data-driven resource allocation decisions rather than static over-provisioning approaches.

How to Monitor and Scale Containerized Applications?

Container monitoring requires observability across infrastructure, applications, and business metrics using tools like Prometheus for metrics collection, Grafana for visualization, and distributed tracing for request flows. Monitoring is essential because “what you can’t see in the runtime layer can’t be fixed”, making comprehensive observability a fundamental requirement rather than an operational nice-to-have.

The observability foundation rests on three pillars: metrics, logs, and traces. Metrics provide quantitative measurements of system behavior including CPU usage, memory consumption, request rates, and error percentages. Logs capture discrete events and error messages providing troubleshooting context. Distributed tracing follows requests across multiple services, revealing performance bottlenecks and dependency relationships in complex microservice architectures.

Prometheus and Grafana form the most common open-source monitoring stack for Kubernetes environments. Prometheus collects time-series metrics by scraping HTTP endpoints exposed by applications and infrastructure components; short-lived jobs that cannot be scraped can push their metrics to a Pushgateway instead. Grafana builds dashboards that combine multiple data sources and adds alerting that notifies teams of threshold violations or anomalous behavior.
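To show what Prometheus actually reads from an application’s metrics endpoint, the sketch below renders a counter in the Prometheus text exposition format using only the standard library. `DemoCounter` is a hypothetical teaching aid; real services would normally use an official client library, and label-value escaping is omitted here.

```python
from typing import Dict, Tuple

LabelKey = Tuple[Tuple[str, str], ...]


class DemoCounter:
    """Minimal counter that renders itself in the Prometheus text format."""

    def __init__(self, name: str, help_text: str) -> None:
        self.name = name
        self.help_text = help_text
        self._values: Dict[LabelKey, int] = {}

    def inc(self, amount: int = 1, **labels: str) -> None:
        key = tuple(sorted(labels.items()))
        self._values[key] = self._values.get(key, 0) + amount

    def render(self) -> str:
        lines = [
            f"# HELP {self.name} {self.help_text}",
            f"# TYPE {self.name} counter",
        ]
        for key, value in sorted(self._values.items()):
            # Note: label values are not escaped in this sketch.
            label_str = ",".join(f'{k}="{v}"' for k, v in key)
            series = f"{self.name}{{{label_str}}}" if label_str else self.name
            lines.append(f"{series} {value}")
        return "\n".join(lines) + "\n"


requests_total = DemoCounter("http_requests_total", "Total HTTP requests.")
requests_total.inc(method="GET", status="200")
requests_total.inc(method="GET", status="200")
requests_total.inc(method="POST", status="500")
print(requests_total.render(), end="")
```

Serving this text over HTTP at a path such as /metrics is what makes an application scrapeable.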

Application performance monitoring extends beyond infrastructure metrics to include business-relevant indicators. Response time percentiles reveal user experience quality while error rates indicate application stability. Custom metrics tracking business events—user registrations, transaction volumes, feature usage—provide correlation between technical performance and business outcomes.
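A small worked example shows why percentiles say more about user experience than averages. The latency values below are invented: 98 fast requests and two very slow ones. `percentile` uses the nearest-rank method, one of several common definitions.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


latencies_ms = [100] * 98 + [2000, 5000]
mean = sum(latencies_ms) / len(latencies_ms)
print(f"mean={mean:.0f}ms p50={percentile(latencies_ms, 50)}ms "
      f"p95={percentile(latencies_ms, 95)}ms p99={percentile(latencies_ms, 99)}ms")
```

The mean suggests a mild slowdown for everyone, while the p99 reveals that a small group of users waited seconds, which is the detail that matters when setting latency objectives.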

Horizontal pod autoscaling with custom metrics enables business-driven scaling decisions beyond simple CPU thresholds. Queue lengths, active user sessions, or processing backlogs provide more relevant scaling triggers for many applications than resource utilization alone. Custom metrics require additional configuration but align scaling behavior more closely with business needs.

Alerting strategies balance notification relevance with team productivity. Alert fatigue occurs when teams receive too many notifications, leading to important alerts being ignored. Effective alerting uses escalation policies, alert grouping, and threshold tuning to ensure critical issues receive immediate attention while minor fluctuations remain logged without generating notifications.
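One threshold-tuning technique that reduces noise is to fire only when a condition has held for several consecutive samples, similar in spirit to the `for` clause in Prometheus alerting rules. The sketch below is a standalone illustration with invented CPU samples; `firing_points` is a hypothetical helper.

```python
from typing import List, Sequence


def firing_points(samples: Sequence[float], threshold: float, for_samples: int) -> List[int]:
    """Return the sample indexes at which an alert would fire.

    The alert fires once when the threshold has been exceeded for
    `for_samples` consecutive samples, and can fire again only after recovery.
    """
    fired: List[int] = []
    streak = 0
    for index, value in enumerate(samples):
        streak = streak + 1 if value > threshold else 0
        if streak == for_samples:
            fired.append(index)
    return fired


cpu = [0.2, 0.9, 0.3, 0.9, 0.95, 0.97, 0.99, 0.4]
print(firing_points(cpu, threshold=0.8, for_samples=1))  # fires on every spike
print(firing_points(cpu, threshold=0.8, for_samples=3))  # fires only on the sustained breach
```

Requiring a sustained breach drops the brief spike at the second sample while still catching the longer episode, at the cost of a short detection delay.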

Capacity planning combines historical usage analysis with growth projections to anticipate resource requirements. Monitoring tools provide telemetry on everything from CPU spikes to traffic anomalies, enabling data-driven decisions about cluster sizing, auto-scaling configuration, and infrastructure investments.

Commercial monitoring solutions like Datadog or Splunk provide comprehensive capabilities for organizations preferring managed services over self-hosted monitoring infrastructure. These platforms offer advanced analytics, machine learning-based anomaly detection, and enterprise support while reducing operational overhead.

FAQ

What’s the difference between containers and container orchestration?

Containers package applications and dependencies into portable units that run consistently across different environments. Container orchestration manages multiple containers across hosts, handling deployment, scaling, networking, and service discovery automatically. Think of containers as individual shipping containers and orchestration as the port management system that coordinates their movement, storage, and distribution.

Can small teams benefit from Kubernetes or is it overkill?

Small teams can benefit from managed Kubernetes services like EKS, GKE, or AKS that reduce operational overhead while providing enterprise-grade features. These services handle cluster management, upgrades, and security patches, allowing small teams to focus on application development rather than infrastructure operations. The investment pays off when applications need scaling or the team expects growth.

How much downtime is typical during container deployments?

Zero downtime is achievable with proper deployment strategies like blue-green or canary deployments, while traditional stop-and-start approaches may require 5-15 minutes of downtime. The key is implementing health checks, load balancer integration, and gradual traffic switching rather than simultaneous container replacement.

What’s the learning curve for implementing GitOps?

GitOps requires 2-4 weeks for basic implementation with tools like Argo CD, plus additional time for advanced features like multi-cluster management. Teams familiar with Git workflows adapt faster, while those new to infrastructure-as-code concepts need more preparation. The investment reduces operational complexity long-term through automated deployments and consistent environment management.

How do I handle persistent data during blue-green deployments?

Use external data stores like RDS or managed databases that both blue and green environments can access, or implement data migration strategies with read-only modes during transitions. Database changes require backward-compatible migrations and feature flags to ensure both application versions function correctly during deployment periods.

What monitoring tools are essential for container orchestration?

Essential tools include Prometheus for metrics collection, Grafana for visualization, and logging solutions like ELK stack or Loki for troubleshooting. Commercial alternatives include Datadog, New Relic, or Splunk for comprehensive monitoring with managed services. The choice depends on team preferences, budget, and integration requirements with existing tooling.

How do canary deployments handle database schema changes?

Database changes require backward-compatible migrations that support both old and new application versions simultaneously. Use feature flags to control new functionality activation and deploy schema changes separately from application updates. This approach ensures both versions can operate during the canary period without data consistency issues.
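A backward-compatible change usually starts with an additive step, such as a new nullable column, so that both versions keep working. The sketch below demonstrates this with an in-memory SQLite database; the `users` table and `email` column are invented for illustration, and production databases have their own locking and migration-tool considerations.

```python
import sqlite3


def run_expand_migration_demo() -> list:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

    # Schema change deployed on its own, before the canary: additive and nullable.
    conn.execute("ALTER TABLE users ADD COLUMN email TEXT")

    # The old application version is unaware of the new column and still works.
    conn.execute("INSERT INTO users (name) VALUES (?)", ("ada",))

    # The canary version writes the new column.
    conn.execute(
        "INSERT INTO users (name, email) VALUES (?, ?)",
        ("grace", "grace@example.com"),
    )

    rows = conn.execute("SELECT name, email FROM users ORDER BY id").fetchall()
    conn.close()
    return rows


print(run_expand_migration_demo())
```

Tightening the schema, for example making the column required or dropping an old one, waits until no running version depends on the previous shape.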

What’s the cost difference between self-managed and cloud-managed Kubernetes?

Managed services cost 10-20% more in direct fees but reduce operational overhead by 60-80%, making them cost-effective when considering total ownership costs including personnel time, training, and maintenance. Self-managed clusters require dedicated expertise and ongoing operational investment that often exceeds managed service premiums.

How do I secure containerized applications in production?

Security requires image scanning for vulnerabilities, network policies restricting inter-service communication, RBAC implementation for access control, and secrets management for sensitive data. Regular security updates, minimal container privileges, and monitoring for unusual behavior provide comprehensive protection against common attack vectors.

Can I use multiple deployment strategies simultaneously?

Different services can use different strategies based on their risk profiles and requirements. Tools like Argo Rollouts support both canary and blue-green strategies, so a team can use canary releases for services that need gradual validation and blue-green cutovers for services that need an instant switch. This approach maximizes safety for critical services while maintaining deployment velocity for lower-risk components.

Making Container Orchestration Work for Your Organization

Container orchestration transforms application deployment from manual, error-prone processes into automated, reliable workflows that scale with organizational growth. The strategies covered in this guide—from choosing between Kubernetes and Docker Swarm to implementing blue-green deployments and GitOps workflows—provide the foundation for modern application delivery.

Success requires matching deployment strategies to application requirements and organizational capabilities. Start with simple approaches like rolling deployments for non-critical applications, then advance to blue-green or canary strategies for mission-critical services. GitOps implementation with Argo CD provides the operational foundation for scalable, auditable deployments across multiple environments.

Cost optimization through monitoring, auto-scaling, and resource rightsizing ensures container orchestration delivers business value beyond operational efficiency. The investment in comprehensive observability pays dividends through reduced troubleshooting time, improved performance insights, and data-driven capacity planning.

Begin your container orchestration journey by assessing current deployment practices, identifying pain points, and selecting initial use cases that provide clear business value. The transition to modern deployment strategies requires investment in tooling, training, and process changes, but the results—reduced downtime, faster deployments, and improved scalability—justify the effort for organizations committed to digital transformation. For a comprehensive view of how container orchestration fits into your broader DevOps automation strategy, explore our complete DevOps automation guide.