In a microservices architecture, managing many backend services whilst maintaining a seamless client experience creates significant complexity. Direct client-to-service communication leads to tight coupling, complex networking, a wider attack surface, and cross-cutting concerns duplicated in every service. The API Gateway pattern addresses this with a unified entry point that simplifies client interactions whilst centralising essential infrastructure concerns.

This guide examines the pattern's core principles, architectural considerations, and implementation strategies to help you make decisions that affect system resilience, team productivity, and long-term architectural evolution.

What Is the API Gateway Pattern?

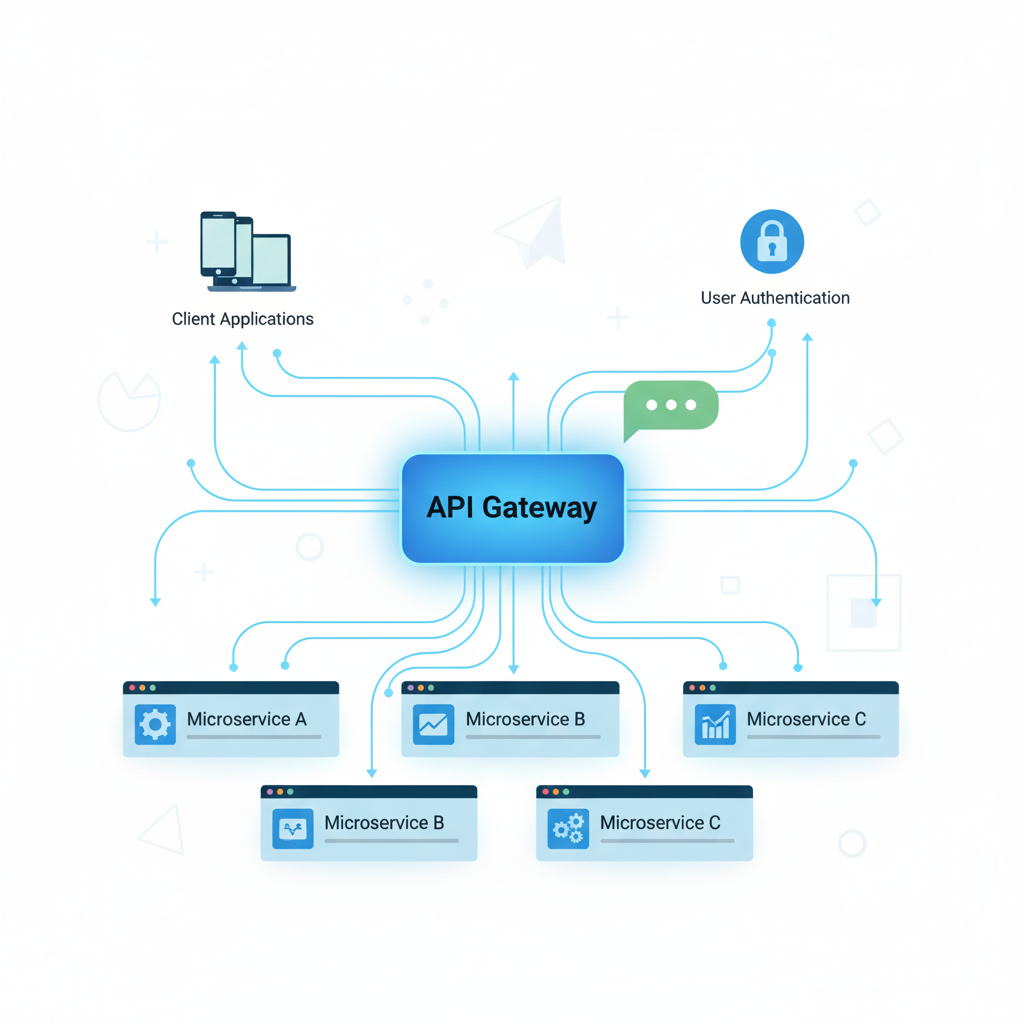

The API Gateway pattern is a microservices architectural approach that provides a single entry point for all client applications to access multiple backend services. It acts as a reverse proxy with intelligent routing capabilities, managing cross-cutting concerns like authentication, rate limiting, and protocol translation whilst decoupling clients from microservices complexity.

The gateway serves as an architectural facade that abstracts microservices complexity from client applications, presenting a unified interface rather than requiring clients to understand multiple service endpoints, protocols, and authentication mechanisms.

When clients communicate directly with multiple microservices, they become tightly coupled to internal implementations, creating maintenance burdens and deployment dependencies. The gateway removes this direct coupling by acting as an intermediary, so service implementations can evolve behind it without impacting clients.

Netflix pioneered this approach with Zuul, routing requests from diverse client devices through a centralised gateway to backend microservices. This enabled them to tailor responses for different device capabilities whilst implementing retry logic and fallback mechanisms. By centralising API management, you can implement consistent security policies, monitoring strategies, and compliance requirements across your entire service ecosystem.

Core Architecture and Components

API Gateway core architecture consists of request routing engines, protocol translators, authentication modules, rate limiting services, and response aggregators. These components work together to process client requests, enforce policies, transform protocols, aggregate responses from multiple services, and return unified results to client applications.

The request routing engine forms the gateway's core intelligence, directing requests to appropriate backend services based on URL patterns, headers, or custom logic. Integration with a service registry lets it discover service instances dynamically and balance load across them automatically.
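To make the routing engine more concrete, here is a minimal Python sketch of path-prefix routing (longest prefix first) combined with round-robin selection over healthy instances. The `ServiceRegistry` class, service names, and addresses are hypothetical stand-ins for a real registry such as Consul or Kubernetes service discovery; a production gateway would also handle timeouts, retries, and registry change notifications.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Tuple


@dataclass
class ServiceRegistry:
    """Hypothetical in-memory registry: service name -> [(address, healthy), ...]."""
    instances: Dict[str, List[Tuple[str, bool]]] = field(default_factory=dict)

    def healthy(self, service: str) -> List[str]:
        return [addr for addr, ok in self.instances.get(service, []) if ok]


class Router:
    def __init__(self, routes: Dict[str, str], registry: ServiceRegistry):
        # Longest prefix first, so a more specific route such as "/users/admin" wins over "/users".
        self.routes = sorted(routes.items(), key=lambda kv: len(kv[0]), reverse=True)
        self.registry = registry
        self._counters: Dict[str, Iterator[int]] = {}

    def resolve(self, path: str) -> str:
        for prefix, service in self.routes:
            # Match whole path segments: "/users" matches "/users/42" but not "/usersettings".
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                instances = self.registry.healthy(service)
                if not instances:
                    raise LookupError(f"no healthy instance for {service}")
                # Round-robin across the instances that are currently healthy.
                n = next(self._counters.setdefault(service, itertools.count()))
                return instances[n % len(instances)]
        raise LookupError(f"no route for {path}")
```

With two healthy `user-service` instances registered, successive calls to `router.resolve("/users/42")` alternate between them, and an unhealthy instance is never selected.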

Protocol translation bridges different communication protocols: clients may communicate via HTTP/REST whilst backend services use gRPC or message queues. Authentication and authorisation modules centralise security enforcement, validating client credentials such as OAuth 2.0 access tokens, JWTs, and API keys, and integrating with external identity providers.

Rate limiting protects backend services from abuse using algorithms such as token bucket and sliding window, enforcing different limits based on client types or user tiers. Response aggregation combines responses from multiple backend services into a single client response, reducing the number of client requests and network round trips.
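Response aggregation is essentially a concurrent fan-out with a timeout on each call. The sketch below uses Python's `asyncio`; the three `fetch_*` coroutines are hypothetical stand-ins for backend HTTP or gRPC calls, and the deliberately slow recommendations call simulates a degraded service whose result is replaced by an empty fallback instead of failing the whole response.

```python
import asyncio
from typing import Any, Awaitable


# Hypothetical backend calls; a real gateway would issue HTTP or gRPC requests here.
async def fetch_profile(user_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"id": user_id, "name": "Ada"}


async def fetch_orders(user_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"order_id": "o-1"}]


async def fetch_recommendations(user_id: str) -> list:
    await asyncio.sleep(1.0)  # simulates a slow, degraded service
    return ["r-1"]


async def call_with_timeout(call: Awaitable[Any], timeout: float, fallback: Any) -> Any:
    # Timeouts and connection failures degrade one field rather than failing the response.
    try:
        return await asyncio.wait_for(call, timeout)
    except (asyncio.TimeoutError, ConnectionError):
        return fallback


async def user_dashboard(user_id: str, timeout: float = 0.1) -> dict:
    # Fan out to all backends concurrently and combine the results into one payload.
    profile, orders, recommendations = await asyncio.gather(
        call_with_timeout(fetch_profile(user_id), timeout, None),
        call_with_timeout(fetch_orders(user_id), timeout, []),
        call_with_timeout(fetch_recommendations(user_id), timeout, []),
    )
    return {"profile": profile, "orders": orders, "recommendations": recommendations}
```

Because the calls run concurrently, the aggregated response takes roughly as long as the slowest call (capped by the timeout) rather than the sum of all three.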

API Gateway vs Load Balancer vs Reverse Proxy

API Gateways extend reverse proxy functionality with microservices-specific capabilities like request aggregation, protocol translation, and API management. Load balancers distribute traffic across service instances for availability, whilst reverse proxies forward requests and cache responses. API Gateways combine both functions plus advanced features for API lifecycle management and business logic routing.

Load balancers distribute traffic across server instances using algorithms like round-robin. Layer 4 load balancers operate at the transport level without application-level awareness, and even Layer 7 load balancers, which can route on HTTP attributes, do not understand API contracts. Reverse proxies forward client requests to backend servers whilst providing caching and SSL termination, but likewise have limited understanding of API contracts.

API Gateways build upon both capabilities whilst adding microservices intelligence—understanding API contracts, aggregating responses from multiple services, and implementing sophisticated content-based routing. These components work synergistically: API Gateways handle internet-facing authentication and routing, whilst load balancers distribute traffic across microservice instances for high availability.

Key Features and Capabilities

Essential API Gateway capabilities include request routing based on URL patterns, headers, or parameters; authentication and authorisation management; rate limiting and throttling; request/response transformation; service discovery integration; circuit breaker implementation; caching strategies; SSL termination; logging and monitoring; and API versioning support for enterprise-scale microservices architectures.

Request routing enables intelligent traffic direction through path-based routing (/users/* to user service), header-based routing (mobile clients to optimised endpoints), and parameter-based routing for API versioning.
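Header- and parameter-based routing can be expressed as an ordered list of predicates where the first match wins. This sketch assumes header names have already been normalised to lower case, and it uses a hypothetical `version` query parameter and a simple `User-Agent` check; the upstream names are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, List, Mapping, Optional


@dataclass(frozen=True)
class Request:
    path: str
    headers: Mapping[str, str]  # assumed already lower-cased
    query: Mapping[str, str]


@dataclass(frozen=True)
class Rule:
    name: str
    matches: Callable[[Request], bool]
    upstream: str


def under(prefix: str, path: str) -> bool:
    """Path-based match on whole segments."""
    return path == prefix or path.startswith(prefix + "/")


# Hypothetical rules, evaluated in order; the most specific rules come first.
RULES: List[Rule] = [
    Rule("users-v2",
         lambda r: under("/users", r.path) and r.query.get("version") == "2",
         "user-service-v2"),
    Rule("users-mobile",
         lambda r: under("/users", r.path) and "Mobile" in r.headers.get("user-agent", ""),
         "user-service-mobile"),
    Rule("users", lambda r: under("/users", r.path), "user-service"),
]


def select_upstream(req: Request, rules: List[Rule] = RULES) -> Optional[str]:
    for rule in rules:
        if rule.matches(req):
            return rule.upstream
    return None  # the gateway would typically respond 404
```

Ordering matters here: placing the generic `users` rule first would shadow the version- and device-specific rules.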

Authentication capabilities centralise security by integrating with enterprise identity providers via SAML, OAuth 2.0, and OpenID Connect, validating JWTs, and enforcing role-based access. Rate limits can likewise be keyed on the authenticated client or API tier.
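As a rough illustration of what JWT validation and role enforcement involve, the sketch below verifies an HS256-signed token using only the Python standard library. It assumes a shared secret and a hypothetical `roles` claim; real deployments more commonly validate RS256 tokens against the identity provider's published keys and should rely on a maintained library such as PyJWT rather than hand-rolled code. `sign_hs256` exists only so the example can produce a test token.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: Dict[str, Any], secret: bytes) -> str:
    """Test helper only: issues a token the way an identity provider might."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, required_role: str,
                 now: Optional[float] = None) -> Dict[str, Any]:
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise PermissionError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    # Pin the algorithm so "alg": "none" or algorithm-swap tricks are rejected.
    if header.get("alg") != "HS256":
        raise PermissionError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise PermissionError("invalid signature")
    claims = json.loads(_b64url_decode(payload_b64))
    # A missing "exp" claim is treated as already expired.
    if claims.get("exp", 0) <= (time.time() if now is None else now):
        raise PermissionError("token expired")
    # Role-based access: the hypothetical "roles" claim must contain the required role.
    if required_role not in claims.get("roles", []):
        raise PermissionError("missing required role")
    return claims
```

On success the verified claims are returned, so the gateway could forward identity information (for example the `sub` claim) to backend services in a trusted header.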

Request and response transformation enables protocol mediation—gateways can transform REST to GraphQL or convert JSON to XML without requiring client changes. Service discovery integration with registries like Consul or Kubernetes enables dynamic routing to available instances, automatically updating routing tables as services scale.
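A JSON-to-XML response transformation for a legacy client might look like the following. The mapping rules (list items become `<item>` elements, `null` becomes an empty element, the root element is `<response>`) are assumptions made for this sketch, and it assumes every JSON key is a valid XML tag name.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(payload: str, root_tag: str = "response") -> str:
    """Convert a JSON document into simple XML using hypothetical mapping rules."""

    def build(parent: ET.Element, value) -> None:
        if isinstance(value, dict):
            for key, child in value.items():
                build(ET.SubElement(parent, key), child)  # assumes keys are valid tag names
        elif isinstance(value, list):
            for item in value:
                build(ET.SubElement(parent, "item"), item)
        elif value is None:
            pass  # null maps to an empty element
        elif isinstance(value, bool):
            parent.text = "true" if value else "false"  # keep JSON's lower-case booleans
        else:
            parent.text = str(value)

    root = ET.Element(root_tag)
    build(root, json.loads(payload))
    return ET.tostring(root, encoding="unicode")
```

For example, `json_to_xml('{"user": {"id": 7}}')` yields `<response><user><id>7</id></user></response>`, so the backend can keep returning JSON while the legacy client receives XML.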

Circuit breakers protect against cascading failures: once a service's failures cross a threshold, the gateway stops forwarding requests to it and returns cached responses or fallback data instead, then periodically lets trial requests through to detect recovery.
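A minimal circuit breaker can be sketched as a three-state Python class: CLOSED (requests flow normally), OPEN (fail fast with a fallback), and HALF_OPEN (after a cool-down, a trial request tests whether the service has recovered). The threshold, cool-down, and injectable clock are assumptions for illustration; this version counts consecutive failures rather than an error rate over a time window, and it does not limit how many trial requests pass while half-open.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "CLOSED"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "HALF_OPEN"  # cool-down elapsed: allow a trial request
        return "OPEN"

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.state == "OPEN":
            return fallback()  # fail fast without touching the struggling service
        try:
            result = fn()
        except Exception:  # any exception counts as a failure in this sketch
            self.failures += 1
            if self.state == "HALF_OPEN" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            return fallback()
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The `fallback` callable is where a gateway would plug in a cached response or default payload, as described above.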

Performance and Scalability Considerations

API Gateway performance depends on routing efficiency, caching strategies, connection pooling, and horizontal scaling. Critical factors include latency overhead, throughput limits, memory management, and CPU utilisation. Proper load balancing, circuit breakers, and geographic distribution help maintain performance under varying traffic loads and service availability conditions.

The additional network hop creates latency overhead, but strategic caching can reduce overall latency by serving frequently accessed responses directly from the gateway. Horizontal scaling enables enterprise-scale traffic handling through cluster deployments with automatic load balancing across instances.

Event-driven, reactive architectures built on Netty, Project Reactor, or Node.js typically outperform thread-per-request designs for I/O-intensive workloads, because a small number of threads can handle thousands of concurrent connections. Connection pooling optimises gateway-to-backend communication by maintaining persistent connections to frequently accessed services.

Caching strategies provide significant performance improvements through time-based expiration, content-based invalidation, and client-specific cache segmentation.
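The three caching strategies above can be combined in a small in-memory cache: entries expire after a fixed TTL, keys are segmented by client type, and `invalidate` drops every copy of a resource after a write. The segment names and the injectable clock are assumptions for this sketch; a clustered gateway would normally use a shared cache rather than per-instance memory.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        # (client_segment, path) -> (expires_at, value)
        self._entries: Dict[Tuple[str, str], Tuple[float, Any]] = {}

    def get_or_fetch(self, client_segment: str, path: str, fetch: Callable[[], Any]) -> Any:
        # Client-specific segmentation: "web" and "mobile" variants are cached separately.
        key = (client_segment, path)
        entry = self._entries.get(key)
        if entry is not None and entry[0] > self.clock():
            return entry[1]  # fresh hit: no backend call
        value = fetch()  # miss or expired entry: call the backend and re-cache
        self._entries[key] = (self.clock() + self.ttl, value)
        return value

    def invalidate(self, path: str) -> None:
        """Content-based invalidation: drop every segment's copy of a resource."""
        for key in [k for k in self._entries if k[1] == path]:
            del self._entries[key]
```

Segmenting by client prevents a response tailored for one client type from being served to another, at the cost of storing one copy per segment.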

Security Architecture in API Gateways

API Gateway security architecture implements defence in depth through centralised authentication, authorisation enforcement, SSL termination, API key management, and threat protection. Security features include OAuth 2.0 integration, JWT validation, DDoS protection, input validation, rate limiting for abuse prevention, and comprehensive audit logging for compliance requirements.

Centralising security at the gateway level ensures consistent policy application whilst reducing the security burden on development teams. SSL termination handles encryption and certificate management for all client communications, simplifying certificate lifecycle management.

OAuth 2.0 and OpenID Connect integration enables modern authentication workflows, validating access tokens and enforcing scopes whilst supporting various flows for web applications, mobile apps, and IoT devices. Input validation protects backend services through comprehensive schema validation, content type enforcement, and payload size limits.
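The three input checks can be applied cheapest first, as in this standard-library Python sketch. The 64 KiB limit, the hand-rolled `CREATE_USER_SCHEMA`, and lower-cased header names are assumptions; real gateways typically validate against JSON Schema or OpenAPI definitions. Each failure maps to an HTTP status: 413 for oversized payloads, 415 for the wrong content type, and 400 for schema violations.

```python
import json
from typing import Any, Dict, Mapping

MAX_BODY_BYTES = 64 * 1024  # hypothetical per-route limit (64 KiB)

# Hypothetical hand-rolled schema: field name -> required Python type.
CREATE_USER_SCHEMA: Dict[str, type] = {"email": str, "age": int}


class ValidationError(ValueError):
    def __init__(self, status: int, message: str):
        super().__init__(message)
        self.status = status  # HTTP status the gateway would return


def validate_request(headers: Mapping[str, str], body: bytes,
                     schema: Dict[str, type] = CREATE_USER_SCHEMA) -> Dict[str, Any]:
    # 1. Payload size: cheapest check, done before any parsing.
    if len(body) > MAX_BODY_BYTES:
        raise ValidationError(413, "payload too large")
    # 2. Content type (header names assumed lower-cased); parameters such as charset are ignored.
    content_type = headers.get("content-type", "").split(";")[0].strip().lower()
    if content_type != "application/json":
        raise ValidationError(415, "unsupported media type")
    # 3. Schema: a JSON object with exactly the expected fields and types.
    try:
        data = json.loads(body)
    except ValueError:  # covers JSONDecodeError and invalid UTF-8
        raise ValidationError(400, "malformed JSON") from None
    if not isinstance(data, dict):
        raise ValidationError(400, "expected a JSON object")
    for name, expected in schema.items():
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            raise ValidationError(400, f"field '{name}' must be {expected.__name__}")
    unknown = set(data) - set(schema)
    if unknown:
        raise ValidationError(400, f"unexpected fields: {sorted(unknown)}")
    return data
```

Rejecting requests at the gateway means malformed or oversized payloads never consume backend resources.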

DDoS protection and rate limiting defend against attacks by distinguishing between legitimate traffic spikes and malicious patterns.
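A token bucket is one common way to tolerate legitimate bursts whilst capping sustained request rates: the bucket's capacity absorbs short spikes, and its refill rate bounds long-term throughput. The tier names and limits below are hypothetical, and state lives in process memory; a multi-instance gateway would need shared state (for example a distributed cache) to enforce a global limit.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucket:
    """Refills at `rate` tokens per second, up to `capacity` tokens."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add the tokens earned since the last check, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # the gateway would typically respond 429 Too Many Requests


# Hypothetical per-tier limits: (tokens per second, burst capacity).
TIERS: Dict[str, Tuple[float, float]] = {"free": (1.0, 5.0), "premium": (20.0, 100.0)}


class RateLimiter:
    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str, tier: str) -> bool:
        bucket = self.buckets.get(client_id)
        if bucket is None:
            rate, capacity = TIERS[tier]
            bucket = self.buckets[client_id] = TokenBucket(rate, capacity, self.clock)
        return bucket.allow()
```

A rate limiter alone only bounds volume per client; telling malicious traffic apart from genuine spikes usually needs additional signals such as IP reputation or request patterns.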

When to Use the API Gateway Pattern

Use the API Gateway pattern when managing multiple microservices with diverse client applications, requiring centralised management of cross-cutting concerns, implementing complex routing logic, or needing protocol translation. It is particularly well suited to organisations with distributed teams, varied service technologies, external API exposure requirements, and enterprise security and compliance mandates.

The decision should be driven by specific architectural challenges: tight coupling between clients and microservices, duplicated cross-cutting concerns, or complex client integration requirements.

Multiple client types create compelling use cases—when supporting web applications, mobile apps, and third-party integrations that require different data formats and authentication mechanisms, the gateway can present client-optimised interfaces whilst maintaining consistent backend implementations.

Complex routing requirements justify implementation when simple load balancing proves insufficient. Content-based routing, A/B testing, or gradual migration strategies benefit from intelligent routing capabilities. External API exposure creates security requirements that align well with centralised authentication, rate limiting, and monitoring.

Implementation Options and Resources

API Gateway implementation options include managed cloud services such as AWS API Gateway and Azure API Management; open-source gateways such as Kong, Spring Cloud Gateway, Traefik, and KrakenD, several of which also offer commercial editions; and custom-built solutions. Selection criteria include feature requirements, scalability needs, budget constraints, integration with the existing technology stack, vendor support quality, and long-term maintenance considerations for enterprise architectures.

Managed services such as AWS API Gateway and Azure API Management provide comprehensive features with enterprise support, but involve usage-based or tier-based pricing. Kong offers both open-source and enterprise versions with an extensive plugin ecosystem and high performance.

Open-source alternatives like Spring Cloud Gateway provide excellent Spring ecosystem integration, whilst Traefik offers automatic service discovery and cloud-native deployment. KrakenD focuses on high-performance scenarios with configuration-driven approaches and stateless design.

Selection criteria should evaluate routing capabilities, authentication integration, rate limiting, monitoring, scalability characteristics, and deployment complexity against specific requirements.

Frequently Asked Questions

1. How does the API Gateway pattern differ from traditional reverse proxy solutions?

API Gateways extend reverse proxy functionality with microservices-specific features like request aggregation, service discovery integration, API lifecycle management, and advanced routing based on business logic rather than just network-level forwarding.

2. What are the main disadvantages of implementing the API Gateway pattern?

Potential disadvantages include single point of failure risks, increased latency overhead, operational complexity, vendor lock-in concerns, and the need for specialised expertise to manage and optimise gateway performance effectively.

3. Can the API Gateway pattern work with existing monolithic applications?

Yes. An API Gateway can facilitate gradual migration from a monolith to microservices through the Strangler Fig pattern: the gateway routes migrated endpoints to new services whilst all other traffic continues to reach the monolith, preserving backwards compatibility for clients.

4. How do API Gateways handle service discovery and dynamic routing?

API Gateways integrate with service registries like Consul, Eureka, or Kubernetes service discovery to automatically route requests to available service instances, implement health checks, and manage load balancing across dynamic service topologies.

5. What is the Backend for Frontend (BFF) pattern and when should it be used?

The BFF pattern creates separate API Gateway instances optimised for specific client types (mobile, web, IoT). Use BFF when different clients require significantly different data structures, security requirements, or performance characteristics.

6. How do you handle API versioning through an API Gateway?

API Gateways support versioning through URL path routing (/v1/users, /v2/users), header-based routing, or subdomain routing. Implement gradual migration strategies and maintain backwards compatibility during version transitions.

7. What are the security implications of centralising API management?

Centralised API management creates a critical security boundary requiring robust authentication, authorisation, audit logging, and DDoS protection. Benefits include consistent security policy enforcement and simplified certificate management across services.

8. How do API Gateways impact microservices independence and deployment?

Properly configured API Gateways preserve service independence through loose coupling and contract-based interfaces. Avoid creating tight dependencies by implementing proper versioning and backwards compatibility strategies.

9. What monitoring and observability features should API Gateways provide?

Essential monitoring includes request/response metrics, error rates, latency percentiles, service health checks, security events, rate limiting violations, and distributed tracing capabilities for comprehensive system visibility.
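Latency percentiles and error rates are straightforward to compute once per-route samples are recorded. This sketch keeps raw samples and uses the nearest-rank percentile definition; the route name and the choice to count only 5xx responses as errors are assumptions, and production gateways usually export histograms to a metrics system rather than storing every sample.

```python
import math
from collections import defaultdict
from typing import Dict, List


class LatencyRecorder:
    def __init__(self) -> None:
        self.samples: Dict[str, List[float]] = defaultdict(list)
        self.errors: Dict[str, int] = defaultdict(int)

    def record(self, route: str, latency_ms: float, status: int) -> None:
        self.samples[route].append(latency_ms)
        if status >= 500:  # assumption: only server errors count towards the error rate
            self.errors[route] += 1

    def percentile(self, route: str, p: float) -> float:
        if not 0 < p <= 100:
            raise ValueError("p must be in (0, 100]")
        data = sorted(self.samples[route])
        if not data:
            raise ValueError(f"no samples for {route}")
        # Nearest-rank method: the smallest sample with at least p% of samples at or below it.
        rank = max(1, math.ceil(p * len(data) / 100))
        return data[rank - 1]

    def error_rate(self, route: str) -> float:
        total = len(self.samples[route])
        return self.errors[route] / total if total else 0.0
```

Tracking p95 or p99 alongside the median matters because a small share of slow requests can dominate user-perceived latency while leaving the average almost unchanged.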

10. How do you migrate from direct service communication to API Gateway pattern?

Implement gradual migration using proxy patterns, maintain dual communication paths during transition, establish comprehensive testing strategies, and ensure fallback mechanisms to minimise service disruption during migration phases.

11. What are the cost implications of different API Gateway solutions?

Costs vary significantly between solutions: open-source options require internal expertise and maintenance; cloud-managed services charge per request or connection; commercial solutions involve licensing fees plus operational overhead.

12. How do API Gateways integrate with service mesh architectures?

API Gateways typically handle north-south traffic (client-to-service) whilst service meshes manage east-west traffic (service-to-service). They complement each other in comprehensive microservices infrastructure, often sharing similar proxy technologies like Envoy.

Conclusion

The API Gateway pattern represents a fundamental architectural approach for managing microservices complexity whilst providing scalable, secure, and maintainable client interfaces. Successful implementation requires careful consideration of performance implications, security requirements, and operational complexity alongside business requirements and organisational capabilities.

For a comprehensive overview of how the API Gateway pattern fits within the broader microservices ecosystem, explore our Complete Guide to Microservices Design Patterns, which covers essential patterns including Circuit Breaker, Service Mesh, and Event Sourcing architectures.